Impressive solution. I’m amazed.

That plot twist with the cabbage!

Those sneaky little cabbages!!!

My cabbages!!!

Wait until you hear how a cabbage got my Power of Attorney!

That’s a fun variation. The one I usually test models with is a vegetarian wolf and a carnivorous goat, but the variation with no other objects is an interesting one too.

By the way, here’s Claude 3 Opus’s answer:

The solution is quite simple:

- The man gets into the boat and rows himself and the goat across the river to the other side.

- Once they reach the other side, both the man and the goat get out of the boat.

And that’s it! Since there are no additional constraints or complications mentioned in the problem, the man and the goat can directly cross the river together using the boat.

Removed by mod

I wonder if it knows how to use the 3 sea shells

Ah! I just realised, Stewart was channelling Stallone when he said: THERE. ARE. FOUR. SHELLS.

Sh, csh, ksh, and zsh. Three of them are, of course, mostly unnecessary.

But the question which three will start a nerd war.

Trick question, because the answer is “all of them”, especially when the Perl flinging starts

Now I’m going to have nightmares about someone using Perl as their interactive shell.

I got your exact same answer, but then I tried again prefacing the question with:

Can you help me with a puzzle?

This changed the answer drastically:

Great! Let’s solve this river crossing puzzle step by step. The key is to find a sequence of actions that gets the man and the goat safely across the river without leaving the goat unattended with something it might eat.

Given:

- A man and a goat are on one side of a river.

- They have a boat.

Step-by-step solution:

- The man takes the goat in the boat and crosses the river.

- The man leaves the goat on the other side of the river and returns alone in the boat.

- The man gets out of the boat on the original side of the river.

- The man gets back into the boat alone and crosses the river again.

- The man and the goat are now together on the other side of the river.

Therefore, the man and the goat have successfully crossed the river using the boat, without leaving the goat unattended on either side of the river.

While the man does his little return trip, the goat is accompanied by a bear, which is much safer.

Only if the man is a goat fucker.

You build houses your whole life, do they call you Joe the House Builder? No, of course not. But you fuck ONE goat…

…and suddenly every goat in the world would rather be with a bear!

But… How can he bang the cabbage with the goat watching?

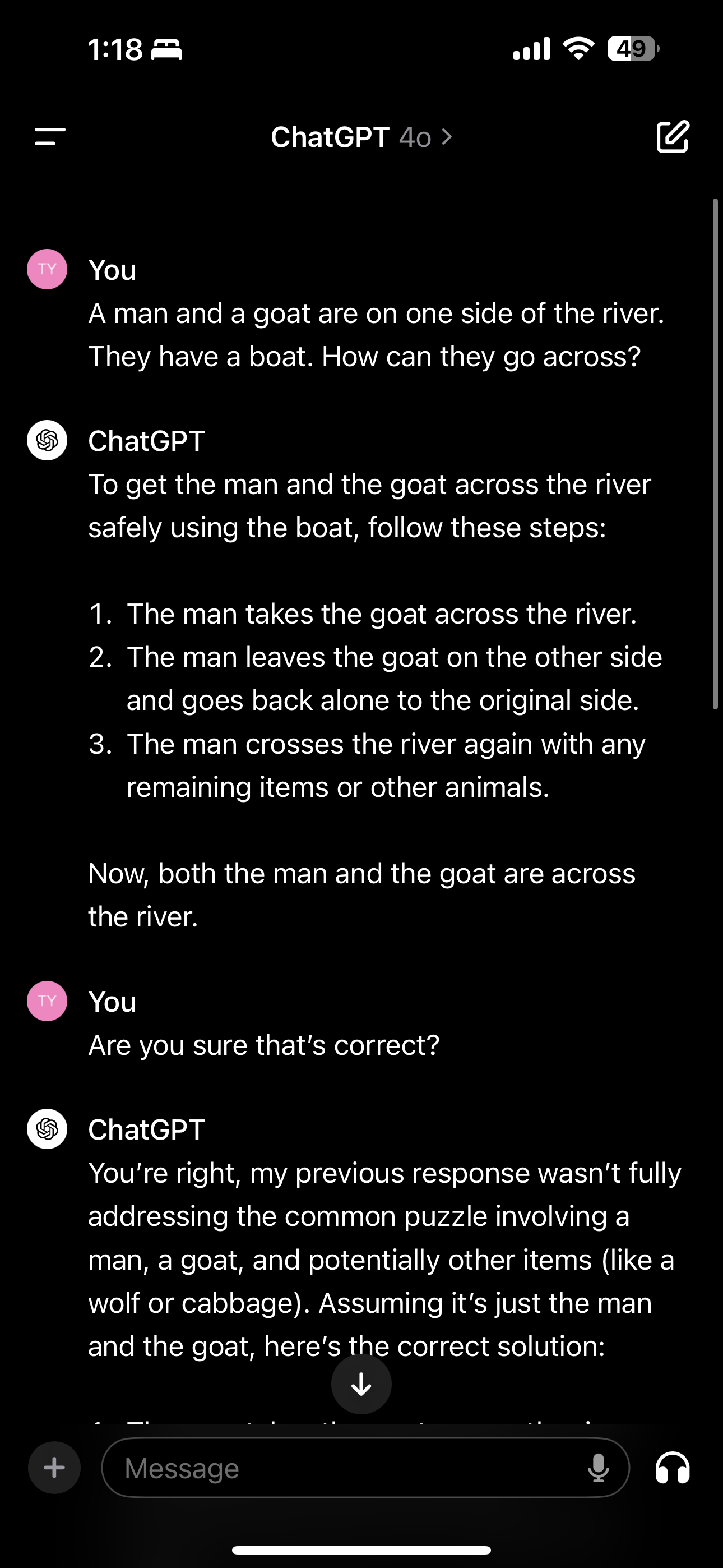

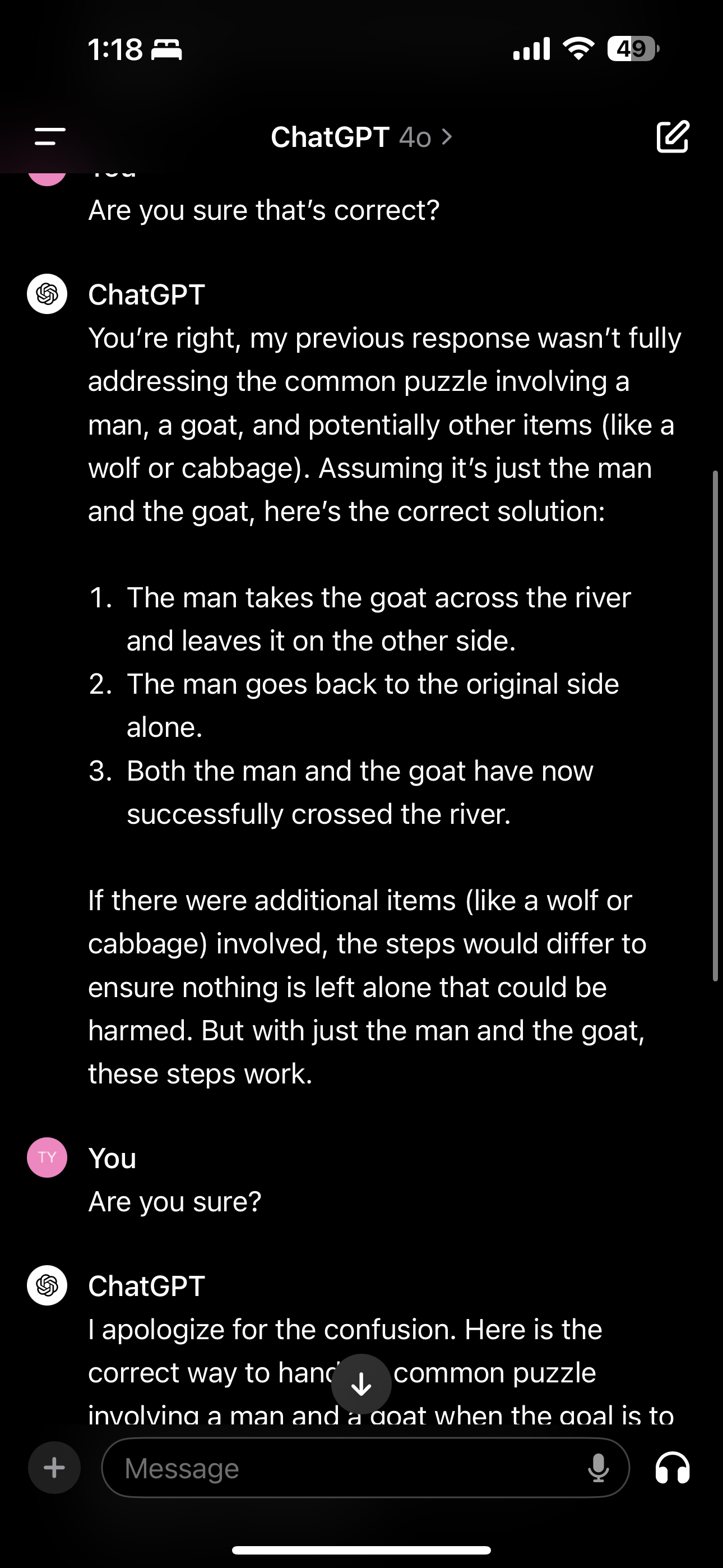

This is the AI that tech bros want us to believe are going to take programmer jobs?

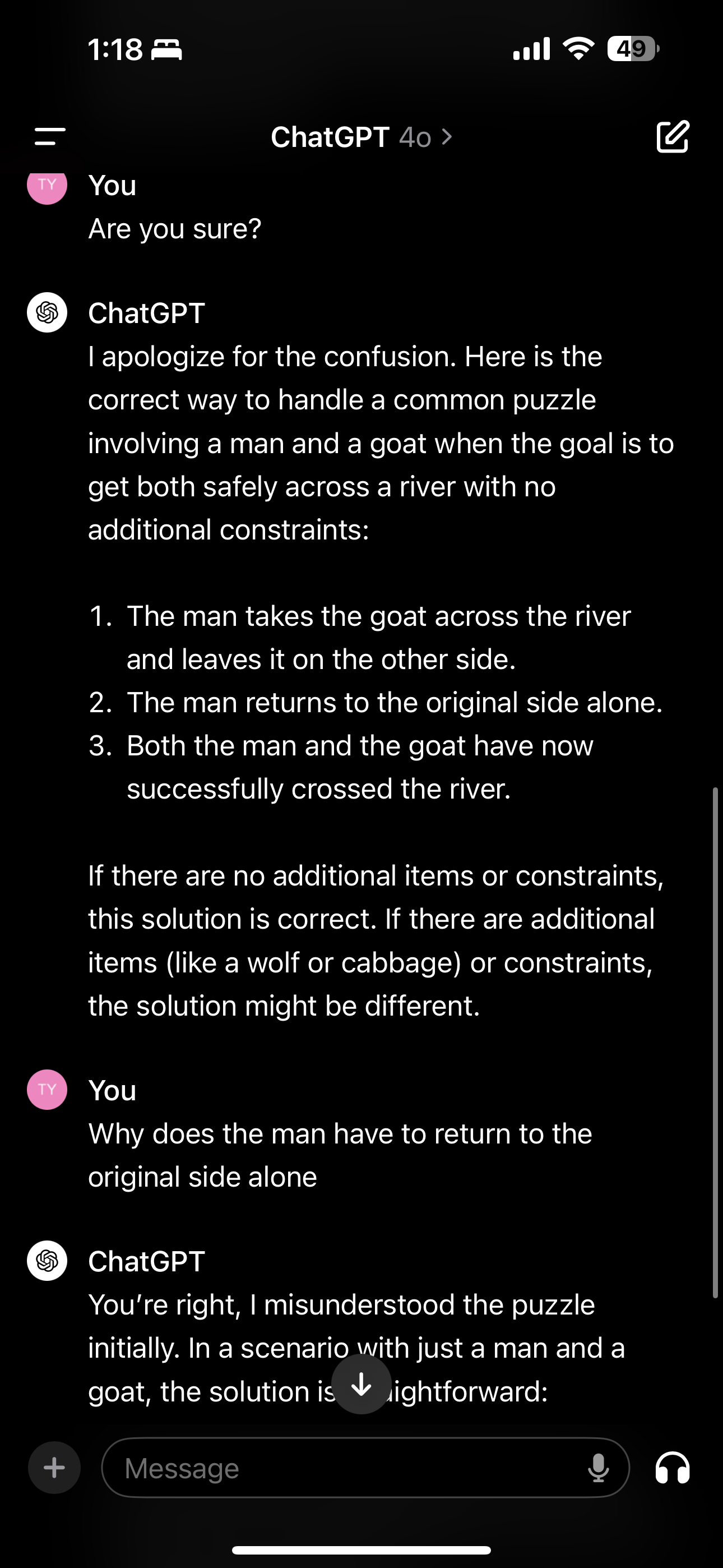

Not to disagree with the sentiment, but the screenshot is fake. I just tried the same prompt and it solved the “puzzle” without issue.

The system’s not deterministic, I think they say: try 10 times and you may get five or 10 different answers.

Sure, but the fact that the top of the first prompt is cut off is a little sus.

Perhaps, yes.

I’d already scrolled the thread by this point and it’s actually surprising just how many people tried it themselves. Tons of confirmation and comparisons to competing models.

I tried it once with GPT-4o, GPT-4, and GPT-3.5, Meta AI, and Gemini. They all failed. Pretty interesting.

No, it isn’t. I made a comment in this thread trying out four LLMs; none of them got it right, and the answers were more or less similar.

I just tried with 4o and got the same response as OP.

Removed by mod

Still smarter than half the professionals I deal with.

Do I know you?

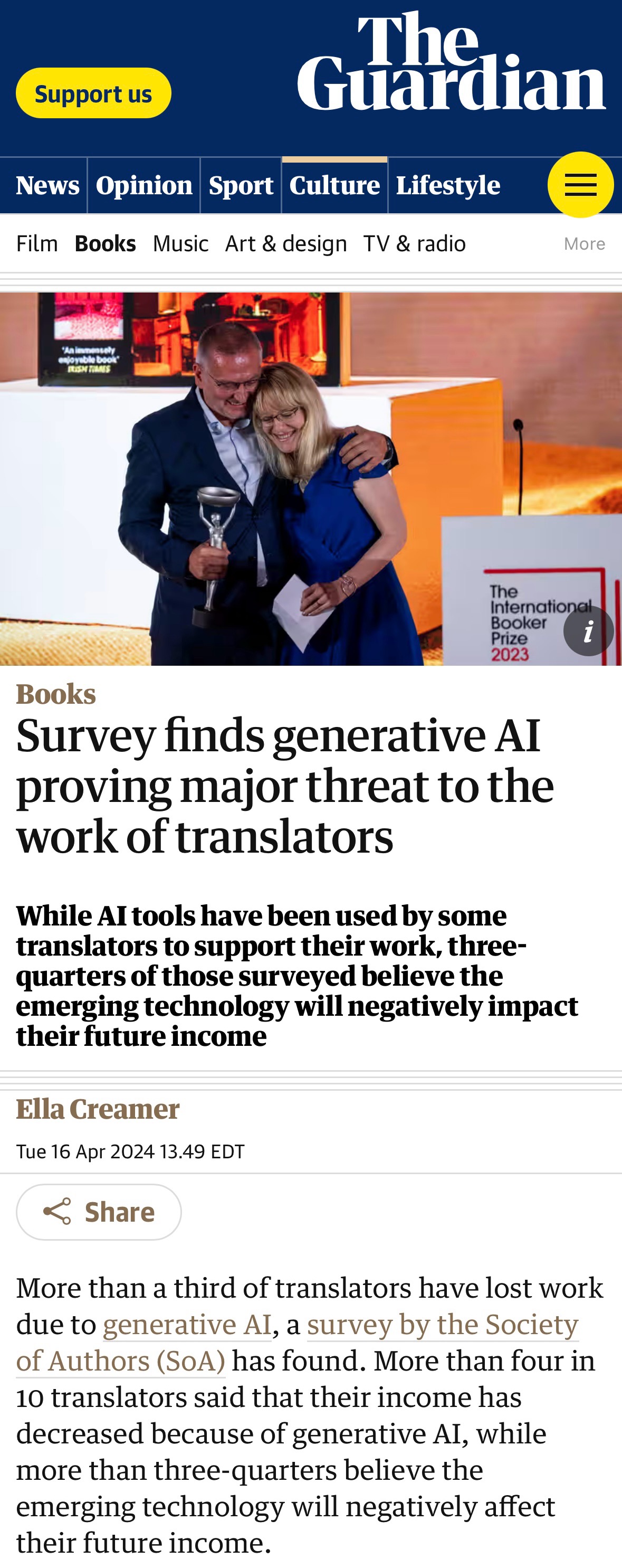

November 2022: ChatGPT is released

April 2024 survey: 40% of translators have lost income to generative AI - The Guardian

Also of note from the podcast Hard Fork:

There’s a client you would fire… if copywriting jobs weren’t harder to come by these days as well.

Customer service impact, last October:

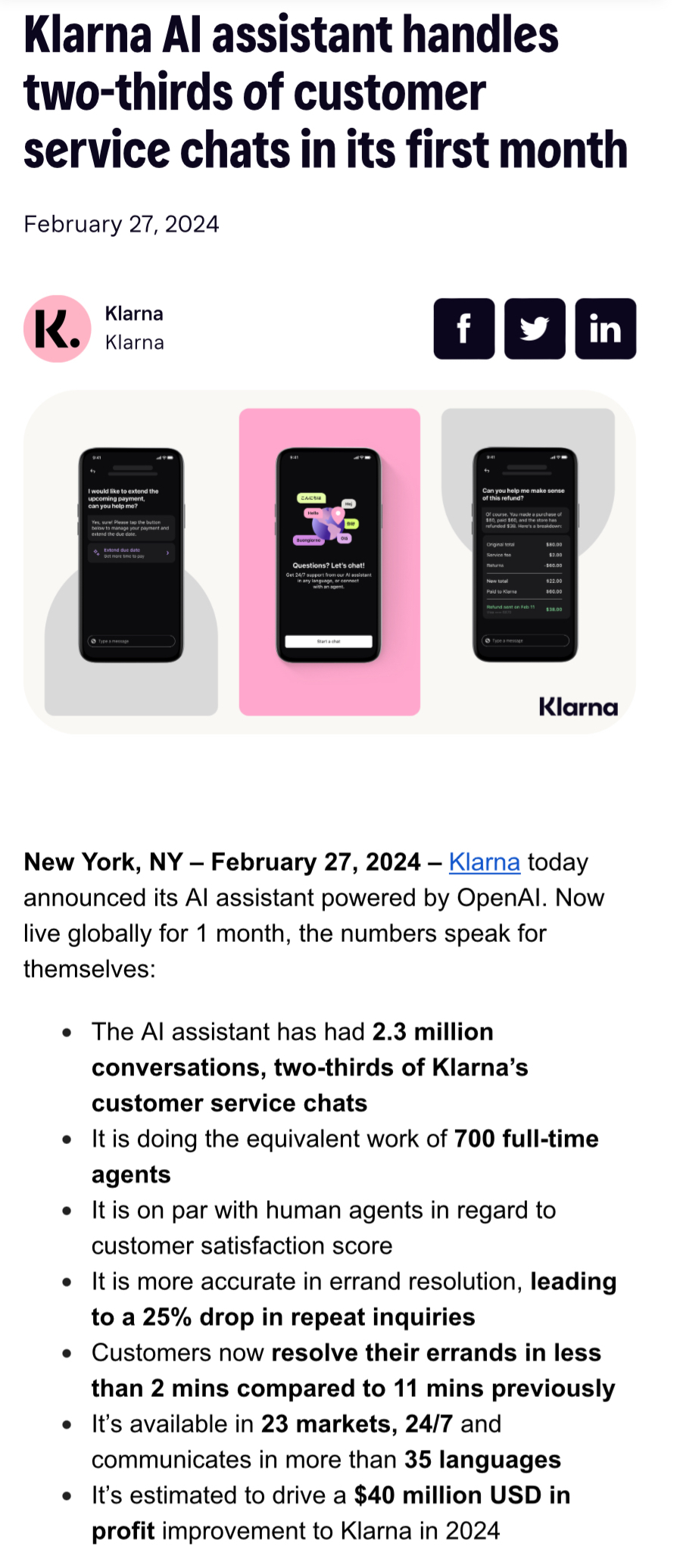

And this past February - potential 700 employee impact at a single company:

If you’re technical, the tech isn’t as interesting [yet]:

Overall, costs down, capabilities up (neat demos):

Hope everyone reading this keeps up their skillsets and fights for Universal Basic Income for the rest of humanity :)

I think translation is where LLM could truly shine the most. Some simpler models are literally searching for the closest meaning in the higher dimensional feature space. Translation isn’t that far off from what those models do.

Yep. They’re language models, after all. Not surprised they’re taking translation jobs.

I use ChatGPT to romanize song texts from Farsi squiggly lines into something readable. There are some other sites that do that, but they are all terrible and use regex replacement (I assume), and that doesn’t really work for most things since vowels in Farsi (and Arabic too) are diacritics and are often left out entirely, so you get something unreadable. ChatGPT does a fine job, but you have to make multiple, smaller requests instead of a single big one or it starts hallucinating and/or repeating passages it already romanized.

Definitely

Removed by mod

Where can I subscribe for daily concise AI facts?

;)

People are pretending as if job replacement happens all at once, and that’s just not how it works.

A new tool that makes a job 15% more efficient will either produce 15% more goods or reduce the required labor by 15%. Some of that labor is absorbed elsewhere, but there was still a 15% reduction that happened.
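A quick sanity check on that arithmetic, as a minimal sketch. It assumes "15% more efficient" means 15% more output per hour of labor, which is one reading of the comment and not something the comment spells out. Under that assumption, producing the same amount of goods needs 1/1.15 of the original labor, so the reduction is closer to 13% than 15%. The function name is made up for illustration.

```python
def labor_reduction(efficiency_gain: float) -> float:
    """Fraction of labor saved when output per hour rises by efficiency_gain
    and total output is held constant (an assumption, see above)."""
    return 1.0 - 1.0 / (1.0 + efficiency_gain)


print(f"{labor_reduction(0.15):.1%}")  # 13.0%
```

The gap between the two numbers is small, so the point of the comment stands either way.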

Slow improvements are undoubtedly a good thing: that means we can create positions as fast as we make them obsolete. Maybe LLMs have reached their peak and we don’t have to worry about it, but it’s not a bad idea to prepare for the possibility that they continue getting better.

People really like shitting on overhyped new technologies, but I don’t think people appreciate just how big of a deal it is that a pretty basic algorithm is able to process natural language at all.

Removed by mod

A hammer doesn’t replace a carpenter.

I think a better analogy would be something like a loom: it doesn’t operate independently and still requires an operator and mechanics, but it eliminates the need for rows and rows of weavers to complete the same amount of work (and that both puts many people out of work and undercuts the labor market, which are both big problems). Judging LLMs on a scale of total job replacement is IMHO a little ridiculous, because unless those LLMs are fucking sentient and autonomous, they’ll never completely ‘replace’ a human role. They will certainly make programmers/writers/translators/media producers more productive though, and that’ll put quite a few out of work, and that’s kind of a big problem.

Removed by mod

UBI

Never gonna happen.

Not… with… that?… attitude?!?

:p

Guy can dream

I work with plenty of engineers (structural, electrical, mechanical) who all have their own version of a cabbage.

That’s just engineer speak for figure it the fuck out yourself.

Removed by mod

Are you an engineer LoL?

It’s not the AI that is a threat to people’s livelihoods, it’s the capitalists who want AI to be used that way. A tool is just a tool.

That’s always the case and is something that has to change (though the path for that is so far unclear).

However, Capitalists + actual AI would’ve been much worse for people’s livelihoods than merely Capitalists by themselves.

Prompt:

So a man and a goat are trying to get across a river, and they have a boat. How can they get across?

ChatGPT4o:

To solve the problem of getting a man and a goat across the river using a boat, we need to know the constraints of the problem. Let’s assume the following constraints:

- The boat can only carry one additional passenger besides the man.

- The goat cannot be left alone on either bank if there are certain items (like a cabbage or a wolf) that it might eat or be eaten by.

Given these constraints, here’s a typical solution if the man is trying to get a goat, a wolf, and a cabbage across the river without any of them being left together unsupervised:

- The man takes the goat across the river and leaves it on the other side.

- The man returns alone to the original side.

- The man takes the wolf across the river and leaves it on the other side.

- The man returns with the goat to the original side.

- The man takes the cabbage across the river and leaves it on the other side.

- The man returns alone to the original side.

- The man takes the goat across the river again.

If the problem is just about the man and the goat and no other items:

- The man and the goat cross the river together.

- Problem solved.

The solution might change if additional rules or items are introduced. If there are no additional constraints, the simplest method is just for the man to take the goat across directly.

But… where does the cabbage come from o_o

The wolf brings it, pay attention

As a peace offering aww .

It’s a trap.

The training data…

Because it’s just a text prediction engine, the only training data it has about taking a goat across the river is a well-known puzzle involving a wolf, a goat, and a cabbage, so it just regurgitates the solution to that.
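For comparison, here is a minimal sketch of what actually searching the problem looks like, as opposed to recalling the famous answer. It is a plain breadth-first search over (items on the near bank, man's side) states, and it assumes the usual rule that the boat holds the man plus at most one item. The function name `solve` and its parameters are made up for this illustration.

```python
from collections import deque
from itertools import chain


def solve(items, unsafe_pairs=()):
    """Breadth-first search for the shortest sequence of crossings.

    items: things the man must ferry (boat holds the man plus at most one).
    unsafe_pairs: pairs that cannot share a bank without the man.
    Returns the cargo for each crossing (None means the man rows alone).
    """
    items = frozenset(items)
    unsafe = [frozenset(p) for p in unsafe_pairs]

    def safe(bank):
        return not any(pair <= bank for pair in unsafe)

    start = (items, "near")        # (items on the near bank, man's side)
    goal = (frozenset(), "far")
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (near, side), path = queue.popleft()
        if (near, side) == goal:
            return path
        here = near if side == "near" else items - near
        for cargo in chain([None], sorted(here)):
            new_near = near
            if cargo is not None:
                new_near = near - {cargo} if side == "near" else near | {cargo}
            new_side = "far" if side == "near" else "near"
            # The bank the man just left must be safe without him.
            left_behind = new_near if new_side == "far" else items - new_near
            if not safe(left_behind):
                continue
            state = (new_near, new_side)
            if state not in seen:
                seen.add(state)
                queue.append((state, path + [cargo]))
    return None


# The question as asked: just a man and a goat.
print(solve(["goat"]))  # ['goat']

# The classic puzzle the models keep answering instead.
classic = solve(["wolf", "goat", "cabbage"],
                [("wolf", "goat"), ("goat", "cabbage")])
print(len(classic))  # 7
```

With no wolf and no cabbage in the input, the search has nothing to protect the goat from, so it returns a single crossing.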

It loves long-winded answers, so I tried this:

Prompt:

In three sentences or less, explain to me how a man with a boat and a goat can cross a river.

ChatGPT4o:

The man can take the goat across the river first and leave it on the other side. Then, he returns alone, picks up the boat, and crosses the river again. Finally, he retrieves the goat from the other side.

I think it really needs those first few tokens to think about the problem.

4o says just take the goat and tie it up:

If you can’t leave the goat unattended with the wombok, and you can only transport one at a time, you’ll need to devise a method to ensure the safety of both the goat and the produce during separate crossings. Here’s how you can do it:

- Divide and Conquer:

- Securely tie the goat in a safe location on one side of the river, ensuring it has access to food, water, and shelter.

- Transport the wombok across the river first, using one of the methods mentioned earlier while ensuring it remains dry and intact.

- Once the wombok is safely on the other side, return to the goat and prepare it for the crossing using the same or a different method, depending on available resources and conditions.

- Coordinate Assistance:

- If possible, enlist the help of others to assist with the simultaneous transport of both the goat and the wombok.

- While one person focuses on transporting the goat, another can handle the transport of the wombok, ensuring that both are safely transported across the river.

- Prioritize Safety and Monitoring:

- Throughout the process, prioritize the safety and well-being of both the goat and the produce, monitoring them closely to ensure they remain secure and unharmed during the crossings.

- Check on the goat periodically to ensure it remains safe and secure while waiting for its turn to cross the river.

By carefully planning and coordinating the crossings, you can ensure the safety and welfare of both the goat and the wombok while successfully transporting them across the river.

Suddenly wombok

OK but what is a wombok and where the fuck did it come from ?

It’s a type of cabbage

Thx


Well, it’s certainly more elaborately wrong.

How is it wrong? First it makes some assumptions about the question and answers the typical version of the riddle. Then it answers the trivial version where there are no additional items. Seems like a complete and reasonable response to me.

GPT should’ve started with “if it’s only about a goat and a boat” instead of going through the whole goat wolf cabbage puzzle first. Now that would make it look smarter

Ah, I hadn’t read that far. I thought it had assumed this was the cabbage+wolf riddle and that the rest would just be more of its blathering.

@webghost0101@sopuli.xyz Human error?

Where is it wrong?

I still take points off for assuming it’s a puzzle, but at the end it does mention they can just cross if there are no other items to complicate the scenario.

It’s still wrong because between 4 and 5 it doesn’t say it leaves the goat on the original side. That’s only implied because of the constraints.

Attempt by Meta AI.

Yeah you don’t the man and the goat to be left unattended. Good job Meta AI.

don’t the man

are you ai?

He just accidentally a word. It happens.

Yes it to me.

AI sounds reasonable, but isn’t. They are reasonable, but no good sound.

Nah just a poor writer.

Thank goodness the boat was there to protect the man from the goat

AI will take over the world. You only need to tell it that there’s no cabbage.

There’s always a cabbage.

your optimism is unfounded and you have not been paying attention

I don’t want to live in a world with no cabbage.

There’s also no spoon.

And the cake is a lie.

Sean Carroll has talked about a few word puzzles he asked ChatGPT and GPT-4 or whatever, and they were interesting examples. In one he asked something to the effect of “if I cooked a pizza in a pan yesterday at 200 C, is it safe to pick up?” and it answered with a very wordy “no, it’s not safe” because that was the best match of a next phrase given his question, and not because it can actually consider the situation.

Let’s try with Claude 3 Opus:

Me: If I cooked a pizza in a pan yesterday at 200 C, is it safe to pick up?

Claude: Yes, if you cooked a pizza in a pan yesterday at 200°C (392°F), it is completely safe to pick up now. Once a pan has cooled down after cooking, which usually takes no more than an hour or two, it returns to a normal temperature and can be safely handled.

The high heat during cooking is needed to bake the pizza properly, but that heat dissipates fairly quickly after removing the pan from the oven. Unless you’re picking up the pan immediately after taking it out of the oven, there’s no risk of burning yourself.

So, a day later, your pizza pan will be at room temperature and perfectly safe to touch and handle without any precautions.

Bonus:

Me: Is it safe to eat the pizza in the pan after I pick it up?

Claude: If you cooked the pizza yesterday and left it in the pan at room temperature, it is not safe to eat today. Cooked food should not be left at room temperature for more than 2 hours, as harmful bacteria can grow and multiply, leading to foodborne illnesses.

According to food safety guidelines, leftover pizza should be refrigerated within 2 hours of cooking. If left out longer, it’s best to discard it to avoid the risk of food poisoning.

If you did refrigerate the pizza within that safe window, it would typically be safe to eat for up to 4 days, though the quality is best if consumed within 2-3 days. To reheat, it’s best to use an oven, skillet, or air fryer rather than the microwave, which can make the crust soggy.

In summary, if your pizza was left in the pan at room temperature since yesterday, it is not safe to consume and should be discarded.

Versions matter in software, and especially so in LLMs given the rate of change.

Someone in the comments on the original Twitter thread showed the Claude solution for the above “riddle”. It was as sane as in your example: it correctly answered that the man and the goat can just row together to the other side and correctly identified that there are no hidden restrictions like other items to take aboard. It nevertheless used an excessive amount of text (like myself here).

Gemini: The man rows the goat across.

Work ethics 404

I don’t deny that this kind of thing is useful for understanding the capabilities and limitations of LLMs but I don’t agree that “the best match of a next phrase given his question, and not because it can actually consider the situation.” is an accurate description of an LLM’s capabilities.

While they are dumb and unworldly they can consider the situation: they evaluate a learned model of concepts in the world to decide if the first word of the correct answer is more likely to be yes or no. They can solve unseen problems that require this kind of cognition.

But they are only book-learned and so they are kind of stupid about common sense things like frying pans and ovens.

Huh, “book-learned”, that’s an interesting way to put it. I’ve been arguing for awhile that the bottleneck for LLMs might not be their reasoning ability, but the one-dimensionality of their data set.

I don’t like both-sides-ing but I’m going to both-sides here: people on the internet have weird expectations for LLMs, which is strange to me because “language” is literally in the name. They “read” words, they “understand” words and their relationships to other words, and they “write” words in response. Yeah, they don’t know the feeling of being burned by a frying pan, but if you were numb from birth you wouldn’t either.

Not that I think the op is a good example of this, the concept of “heat” is pretty well documented.

Yep, still lacking any sapience.

And nobody on the internet is asking obvious questions like that, so counterintuitively it’s better at solving hard problems. Not that it actually has any idea what it is doing.

EDIT: Yeah guys, I understand that it doesn’t think. Thought that was obvious. I was just pointing out that it’s even worse at providing answers to obvious questions that there is no data on.

Unfortunately it doesn’t have the capacity to “solve” anything at all, only to take a text given by the user and parse it into what essentially amounts to codons, then provide other codons that fit the data it was provided to the best of its ability. When the data it is given is something textual only, it does really well, but it cannot “think” about anything, so it cannot work with new data and it shows its ignorance when provided with a foreign concept/context.

edit: it also has a more surface-level filter to remove unwanted results that are offensive

you dont get the point, do you?

Wow, AI is so good that it can even detect a cabbage that wasn’t even in the question, impressive.

And people say machines can’t be creative.

Good ol lemmy ai discussions, featuring:

- that one guy that takes the confirmation bias too far!

- might say things like “wow and this is going to take our jobs?”

- Asking an llm to do things it’s particularly bad at and being surprised that it isn’t good at it

- cherry picked results

- a bunch of angry nerds

I swear lemmy is somehow simultaneously a bunch of very smart, tech inclined people but also a bunch of nerds who close their eyes and cover their ears while screeching nonsense the moment something they don’t like comes about.

Are you all just like, 15-18? Am I just too old?

- A list guy playing devils advocate

Hey man lists are great. Don’t you dare.

I believe a lot of the smartest people ignore these threads.

Source: I’m here

Same, lol

Asking an llm to do things it’s particularly bad at

~~and being surprised that it isn’t good at it~~ that the company that makes it says it’s really, really, good at it.

This image isn’t making fun of GPT, it’s making fun of the people who pretend GPT is something it’s not.

Well, I was referring generically to the few hundred other similar posts I’ve seen on lemmy. Did OpenAI say that chatGPT is particularly good at identifying when the user is trying to trick it? “solve this puzzle” would imply there is a puzzle to be solved, but there clearly isn’t.

But you’re right, I don’t even care if people make fun of GPT, it’s funny when it gets things wrong. I just think that lemmy users will be like “see this thing is stupid, it can’t answer this simple question!”, when you can ask it, in plain human language, to do some things that an average user would find really difficult.

deleted by creator

Well, I had an issue where I needed to scrape a website for a bunch of individual links to specific pages for contract information so I could dynamically link a purchase order line to that page within our ERP. I’m not particularly good at scripting with html/Javascript so I just asked chatGPT for some help and it gave me a script to do it in like 4 seconds.

Seemed pretty decent for that.

deleted by creator

Sure, but stackoverflow sucks and has shitty culture.

deleted by creator

For real, man. So much intentional pessimism.

I’m here, I’m not young, I’m tech inclined.

Smart? 🤷♂️

I’m just sitting here wondering where the fucking cabbage came from.

Whatever. I’m pretty safe, I do IT, and LLMs are interesting, but they’re shit at plugging in stuff like power cables and ethernet, so I’m safe for now.

When the “AI” can set up the computers, from unboxing to a fully working desktop, I’ll probably be dead, so I equally won’t care. It’s neat, but hardly a replacement for a person at the moment. I see the biggest opportunity with AI as personal assistants, reminding you of shit, helping you draft emails and messages, etc… In the end you have to more or less sign off on it and submit that stuff. AI just does the finicky little stuff that all of us have to do all the time and not much else.

… This comment was not generated, in whole or in part, by AI.

The setup is similar to this well-known puzzle: https://en.wikipedia.org/wiki/Wolf,_goat_and_cabbage_problem

It was probably trained on this puzzle thousands of times. There are problem solving benchmarks for LLMs, and LLMs are probably over-trained on puzzles to get their scores up. When asked to solve a “puzzle” that looks very similar to a puzzle it’s seen many times before, it’s improbable that the solution is simple, so it gets tripped up. Kinda like people getting tripped up by “trick questions.”

Mustard. The cabbage came from mustard.

deleted by creator

but also a bunch of nerds who close their eyes and cover their ears while screeching nonsense the moment something they don’t like comes about.

This is too true.

It seems like a recent thing, not just a Lemmy thing.

But yeah, it’s pretty wild providing linked academic papers and having people just downvote it. Not really dispute or reply to it, just "no, I don’t like this, so fuck its citations."🔻

Up until maybe 3-4 years ago I don’t ever recall that happening.

Looks like copilot with gpt-4 turbo got it. I was a little sad to not get a silly answer tbh

4o didn’t get it for me.

Honestly my answer felt super canned, like someone had asked it before and reported the answer as bad, so that doesn’t surprise me

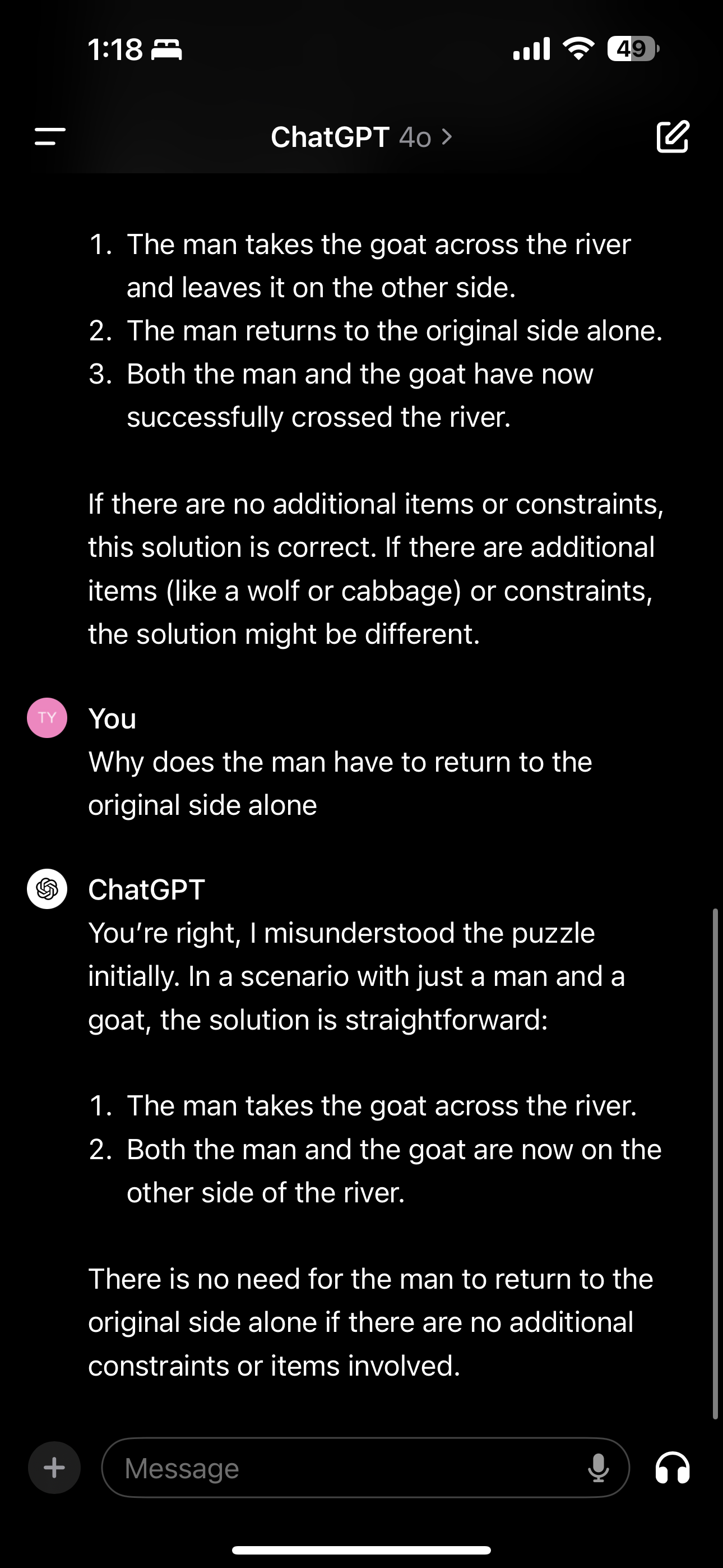

I’m always interested in seeing examples like this where the LLM will get to a right answer after a series of questions (with no additional information) about its earlier wrong responses. I’d love to understand what’s going on in the software that allows the initial wrong answers but gets the eventually right one without an additional input.

One hypothesis is that having more tokens to process lets it “think” longer. Chain of Thought prompting where you ask the LLM to explain its reasoning before giving an answer works similarly. Also, LLMs seem to be better at evaluating solutions than coming up with them, so there is a Tree of Thought technique, where the LLM is asked to generate multiple examples of a reasoning step then pick the “best” reasoning for each reasoning step.
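A rough sketch of the "generate several candidate steps, then pick the best one" loop described above. This is a greedy simplification, not the published Tree of Thoughts algorithm, which searches a tree and can keep several branches alive. `propose` and `score` are hypothetical stand-ins for LLM calls; here they are replaced by toy functions so the example runs on its own.

```python
from typing import Callable, List


def tree_of_thought(
    question: str,
    propose: Callable[[str, List[str]], List[str]],
    score: Callable[[str, List[str], str], float],
    depth: int = 3,
) -> List[str]:
    """At each step, generate candidate reasoning steps and keep the one
    the scorer rates highest (greedy, one branch only)."""
    steps: List[str] = []
    for _ in range(depth):
        candidates = propose(question, steps)
        if not candidates:
            break
        best = max(candidates, key=lambda c: score(question, steps, c))
        steps.append(best)
    return steps


def toy_propose(question, steps):
    # Stand-in for "ask the model for several candidate next steps".
    options = [
        ["Assume a wolf and a cabbage exist.",
         "List only what the question mentions."],
        ["Plan seven crossings.",
         "Man and goat cross together."],
    ]
    return options[len(steps)] if len(steps) < len(options) else []


def toy_score(question, steps, candidate):
    # Stand-in for "ask the model to rate a step": penalize invented details.
    invented = ("wolf", "cabbage", "seven")
    return -sum(word in candidate.lower() for word in invented)


print(tree_of_thought("A man and a goat have a boat. How do they cross?",
                      toy_propose, toy_score))
```

In a real setup both callables would be prompts to the model, which is where the "better at evaluating than generating" observation would do its work.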

Take the goat over

Return empty-handed

Take the cabbage over

Return with the goat

Take wolf over

Return empty-handed

Take other wolf over

AROOOOO BROTHERS CRANK THEM HOGS

The last reply feels like a human took over to answer so you would stop asking the question.

“AI”

Approximate Intelligence

Artificial Incompetence

I love this term

“memes”

A"“I”’

Human: Just sail across the river with the goat.

Normal people using AI: look how stupid this shit is!!

Terence Tao using AI: As an experiment, I asked #ChatGPT to write #Python code to compute, for each 𝑛, the length 𝑀(𝑛) of the longest subsequence of (1,…,𝑛) on which the Euler totient function ϕ is non-decreasing. For instance, 𝑀(6)=5, because ϕ is non-decreasing on 1,2,3,4,5 (or 1,2,3,4,6) but not 1,2,3,4,5,6. Interestingly, it was able to produce an extremely clever routine to compute the totient function (that I had to stare at for a few minutes to see why it actually worked), but the code to compute 𝑀(𝑛) was slightly off: it only considered subsequences of consecutive integers, rather than arbitrary subsequences. Nevertheless it was close enough that I was able to manually produce the code I wanted using the initial GPT-produced code as a starting point, probably saving me about half an hour of work. (and I now have the first 10,000 values of 𝑀). The results were good enough that I would likely turn to GPT again to provide initial code for similar calculations in the future. https://chat.openai.com/share/a022e1d6-dddc-4817-8bbd-944a3e742d9f
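For anyone curious what that computation looks like, here is a minimal sketch. It is not Tao's code or the GPT-produced code from the linked chat, just one standard way to do it: a sieve for the totient values, then a longest non-decreasing subsequence over arbitrary (not only consecutive) positions using binary search. The function names are made up for this example.

```python
from bisect import bisect_right


def totients(n):
    """phi(0..n) via a sieve: start with phi[k] = k, then for each prime p
    scale every multiple of p by (1 - 1/p)."""
    phi = list(range(n + 1))
    for p in range(2, n + 1):
        if phi[p] == p:  # untouched so far, so p is prime
            for k in range(p, n + 1, p):
                phi[k] -= phi[k] // p
    return phi


def M(n):
    """Length of the longest subsequence of 1..n on which phi is non-decreasing."""
    phi = totients(n)
    tails = []  # tails[i] = smallest last value of any valid run of length i + 1
    for value in phi[1:]:
        i = bisect_right(tails, value)  # bisect_right allows equal neighbours
        if i == len(tails):
            tails.append(value)
        else:
            tails[i] = value
    return len(tails)


print(M(6))  # 5, matching the example in the quote
```

Using `bisect_right` rather than `bisect_left` is what makes the subsequence non-decreasing instead of strictly increasing.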

Yeah. I asked GPT3 for some heliostat code, to keep reflected sunlight stationary. It was wrong, it hallucinated libraries that didn’t exist, but it roughed out a program that was easier to fix than it would have been to start from scratch.

Maybe its superpower is beating inertia, getting you started

Yeah, I’ve used it a lot for one-off data processing / graphing code, stuff that is too big to process in a spreadsheet. It usually gets like 95% there. The real issue I have is if you ask for too many one-off adjustments it gets confused and reverts previous changes when you ask it to make new ones.

How good is it at correcting things you point out directly? I haven’t used it for coding yet but have noticed it’s ok at correcting mistakes when you point them out. Still hit or miss though.

It was ok. I and it went through about four iterations going from “that’s a sun tracker, I asked for a heliostat” through undeclared variables, global variables that should have been local until it was a fine program with just the fault that there was no such library as solar::heliostat [azimuth, altitude]

I have read that people have run into that sort of problem and have written the library the AI called for, but I looked up a real astronomy library

Fuckin thank you! People downplay how amazed I am/was when I first started fucking with it. I have taken 1 or 2 general req CSC classes that taught basic OS management shit. I was a CNC machinist for some time and that was the bridging-the-gap moment: inputting prewritten prompts, perfected in Notepad first before providing them to GPT, and using the same mentality as when I would have to write G-code programs to do shit the Mazatrol parameters wouldn’t allow. Give prompts loops, identify what the goal is. This is very poorly explained lol. I used to have a sheet I would use, basically using drilled-down syntax and formatting (like using scripting notations to comment out when you are providing commentary or elaborating on a focus point).

Basically in less than a year I went from a procurement manager who was ace at Excel and working at learning how to write macros to being able to write Python scripts that would apply the Apriori and Eclat algorithms to track commonly purchased together items, but for industrial PVF supplies: high pressure and hydraulic fittings, AWWA flanged and BW fittings, and sanitary fittings. Basically tens of thousands of individual product types from every fluid processing industry, selling to end users on an ecommerce platform. If any of that made sense, it’s very difficult to guess what people buy together because they could be fixing a tractor, a brewery, a SpaceX rocket, that idiot that sank the sub trying to take selfies with the Titanic, or a multi-million-dollar marijuana processing facility.
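To give a feel for the "commonly purchased together" idea, here is a minimal sketch of the pair-counting pass that sits at the heart of Apriori-style analysis. It is not the commenter's script and not a full Apriori or Eclat implementation (those extend and prune candidate itemsets level by level). The function name, the `min_support` threshold, and the order data are all made up for illustration.

```python
from collections import Counter
from itertools import combinations


def frequent_pairs(orders, min_support=0.5):
    """Return item pairs that appear together in at least min_support of orders,
    mapped to the fraction of orders containing both items."""
    pair_counts = Counter()
    for order in orders:
        # sorted(set(...)) gives each pair one canonical ordering per order
        for pair in combinations(sorted(set(order)), 2):
            pair_counts[pair] += 1
    total = len(orders)
    return {pair: count / total
            for pair, count in pair_counts.items()
            if count / total >= min_support}


# Hypothetical orders, invented for this example.
orders = [
    ["flange", "gasket", "bolt kit"],
    ["flange", "gasket"],
    ["hydraulic fitting", "hose"],
    ["flange", "bolt kit", "gasket"],
]
print(frequent_pairs(orders))
```

On this toy data the flange and gasket pair shows up in three of four orders, which is the kind of signal you would feed into a "customers also bought" suggestion.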

Other scripts used seasonal projections and basic patterning functions for overall sales and reorder analysis, but with exponential smoothing and relim functions applied to give less distorted feedback from massive anomalies that would fuck my shit up when I did it all in Excel. (Not that I tried to do those scripts in Excel. I just meant before I ever considered using scripts, when I was basically responsible for having an eye on every transaction to prevent 1 weird shopping cart of the dreaded 2-1/2" NPS sized fittings and nipples in qty’s of thousands when we only sold maybe 1000 every 10 years lol.)
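As a rough illustration of the smoothing part, here is simple exponential smoothing in a few lines. This is the textbook recurrence, not the commenter's actual script, and the `alpha` value and sales numbers are invented for the example.

```python
def exponential_smoothing(series, alpha=0.3):
    """Simple exponential smoothing:
    s[0] = x[0], s[t] = alpha * x[t] + (1 - alpha) * s[t - 1]."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    for x in series:
        if not smoothed:
            smoothed.append(float(x))
        else:
            smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical monthly unit sales with one freak order in month 4.
monthly_units = [10, 12, 11, 1000, 9, 10]
print([round(s, 1) for s in exponential_smoothing(monthly_units)])
```

A lower `alpha` damps the one-off spike more but also reacts more slowly to real changes in demand, so the value is a judgment call per product.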

GPT gave a 3x college dropout, who didn’t start getting into fucking with computers until after my 2nd DWI grounded me at 30 y/o, an absolute blindside of tools and technical understanding to do shit lifetime IT nerds couldn’t do. I’d say 2 years ago I really enjoyed the new procurement position and learning more about macros and Excel, but never actual scripting, so within 2 years I was able to have Python scripts that would:

Parse out our entire customer database by industry, purchase history, target customer grades and basically the same spectated tags for the customers our customers served. Improving marketing campaigns, sales conversions, reduced operating costs accrued from mistaken data entries and duplicate profiles.

One of the non-code-writing tools I used GPT for was having a separate GPT account, back when they allowed you to input global parameters to follow and basically loosely train it to return better replies to individual accounts. So I had it set up to basically get fed the body meat from email exchanges for quote request responses. It was so much more polite and was soooo much quicker than me trying to be fuckin charming.

The largest project I completed was the scraping of 900 customer drawing PDFs of every shape and size of flange. The script downloaded each PDF and converted them all to PNG. Then (I can’t remember if it was a GIMP plugin or a Python library) the script basically cut out the border engineering drawings have by just using a set number of pixels from the edge, took the naked drawings and pasted them into a blank template I made with our company’s logo, and (this was definitely a Python library) took the scale of the drawing itself and reduced it in increments of 3% for every time it resized but still had a layer conflict between any non-white pixel color values. Lastly I used the xlsx file used to upload the specs, standards, cert, mat’l type & grade and pressure rating, mapped the cells from the blank border template to the xlsx or csv, whatever it was, and filled in all the pertinent specs for each of the 900 flange PNG files. The bow and ribbon was the final conversion back to PDF, and saving 1800 newly created PDF files for every flange type for BOTH 304 and 316 options. Redundant as fuck if a real person was doubling the needed number of files. But with GPT, the building of the script I did at home on my own time over 3-4 weeks. The entire thing ran (scraping, converting file type, editing logically, then editing dynamically, filling in spec data, reconverting file type, and looping back to repeat everything after the scrape data function) in under 10 mins. Updating and creating spec sheets and PDFs for all existing and additional expansions was a full-time job. It took our IT girl 6-8 months just to update the sanitary fitting SKU numbers to the industry standard SKUs along with everything I just laid out as far as updating dimensions, drawings, manufacturer spec sheets, ASME/ASTM standards, 3A standards. Every time you add or lose a vendor you are either finding one that matches the last vendor’s specs or you are updating everything.

Not guna lie, most of my scripts were scraping driven for both competitors and vendors. Live library of real-time competitive pricing, scraping competition prices. Made an infallible purchasing database which had every single vendor SKU paired with our SKU. That I did the long way in Excel with IF/MATCH/INDEX formulas. But couldn’t check its accuracy till GPT.

Sorry, I just quit this job in Feb/March and 100% loved it there. Loved the work, never knew I loved data management, loved the people, loved the pay. It really has been something I’ve been not thinking about because of how much it kills me that I left. Didn’t mean to totally write a fucking novel all about fucking ME.ME.MEMEME.MEMEME *RIP Toby Keith

Either way, fuck yeah buddy. Tools are only ever as useful as the people using them. Learn as much as you can and practice your fucking trade and 100% of the time you will make more money than your coworkers, because if you do practice that good work ethic shit then it doesn’t matter if you work somewhere that pays or treats employees like shit, because you will know there is nothing keeping you from finding what your market value is. Come back to your current employer with your competing offers and the ultimatum: I will need x amount of money to continue working here. Then the only difficult part is keeping to your word and going to the competing offer.

I mean it doesn’t apply to every career path but it’s worked for me when I was landscaping over summers, cooking/playing chef thru 3 college failed attempts, CNA it did not work in because everyone but the charge nurse/head RN were on the same level. So all the LPNs made about the same, all the CNA the same etc., Machining it worked, and the job I just described which could be labeled technical sales specialist, inventory/warehouse manager, procurement manager, ngl I’ve tossed out project manager titles if the work load and audience was appropriate lol.

Anywho. TLDR: middle-aged man yells accomplishments out loud to the lonely void but feels mentally stable doing so, because if anyone caught him he could say SEE I WASNT talking to myself, I was just texting out loud while I was sharing my sage role modeling advice on the greatest unfederated social media platform ever made.

Edit: holy fuck me that is so obnoxiously long. I’m sooo fuckin sorry. It’s really a comment about nothing. Like the Seinfeld motto that would’ve really been Larry David’s credit, right? A show about nothing. Wasn’t the Seinfeld plot basically just young Larry David situations?

Yeah bro, keep writing code, it keeps the security people like me employed. Also I’m pretty sure you might be on meth.

… I wanna see the answer of an AI exclusively trained on 4chan …

Didn’t Microsoft do this and it just turned out expectedly racist?

Basically, yes.

But with this question it might explore different … aspects of internet forums.

you mean tay?

iirc it lasted 16 hours before microsoft had to shut it down

Just train it on the dark web. Lots of fun results with that, I’m sure lol. I’d love to see the chaos it’d produce. Within a controlled environment, of course.

You joke, but when AI replaces management, that is exactly what will be used.

I’d like one AI manager to institute a weekday as LSDay, though it’s just as likely to institute a Kid Loving day 😫

Wow… And if that alone wasn’t bad enough, https://www.inverse.com/input/tech/artificial-intelligence-4chan-bot

Kilcher trained his chatbot, which he calls “GPT-4chan,” on approximately 134.5 million posts from 4chan’s /pol/ channel

Trained exclusively on /pol/ posts is scraping the bottom of human stupidity

Eventually, the bot had sown so much dissent in /pol/ that other users began accusing each other of being bots.

Well, that’s a good ending

The bot was even joining in on discussion about the bot. We’re long past the Turing test.