- cross-posted to: retrocomputing@lemmy.sdf.org

People now: “ChatGPT isn’t real AI because it says dumb shit all the time”. People then: “Prolog is AI because it can solve logic problems”.

Something with moving goalposts or something

They are both different parts of the same problem. Prolog can solve logical problems using symbolism. ChatGPT cannot solve logical problems, but it can approximate human language to an astonishing degree. If we ever create an AI, or what we now call an AGI, it will include elements of both these approaches.
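For readers who haven't seen Prolog, here is a minimal sketch of the symbolic style described above: facts, one rule, and backward chaining with backtracking to answer a query. It is written in Python, not Prolog. It uses a hypothetical family-tree knowledge base and only handles flat terms (no nested structures, no cut, no occurs check), so it illustrates the idea rather than how a real Prolog engine is built. The equivalent Prolog would be the rule `grandparent(X, Z) :- parent(X, Y), parent(Y, Z).` plus the three `parent` facts.

```python
from itertools import count

# Hypothetical knowledge base: facts are (predicate, arg1, arg2) tuples.
FACTS = [
    ("parent", "tom", "bob"),
    ("parent", "bob", "ann"),
    ("parent", "bob", "pat"),
]

# One rule, Prolog-style: grandparent(X, Z) :- parent(X, Y), parent(Y, Z).
RULES = [
    (("grandparent", "X", "Z"), [("parent", "X", "Y"), ("parent", "Y", "Z")]),
]

def is_var(term):
    # Like Prolog, treat capitalised names as logic variables.
    return term[:1].isupper()

def walk(term, env):
    # Follow variable bindings until reaching a constant or an unbound variable.
    while is_var(term) and term in env:
        term = env[term]
    return term

def unify(a, b, env):
    # Unify two flat goals; return an extended copy of env, or None on failure.
    if len(a) != len(b):
        return None
    env = dict(env)
    for x, y in zip(a, b):
        x, y = walk(x, env), walk(y, env)
        if x == y:
            continue
        if is_var(x):
            env[x] = y
        elif is_var(y):
            env[y] = x
        else:
            return None
    return env

_fresh = count()

def rename(goal, suffix):
    # Give each use of a rule its own variables so separate calls don't clash.
    return tuple(t + suffix if is_var(t) else t for t in goal)

def solve(goals, env):
    # Backward chaining: prove goals left to right, yielding every binding that works.
    if not goals:
        yield env
        return
    first, rest = goals[0], goals[1:]
    for fact in FACTS:
        new_env = unify(first, fact, env)
        if new_env is not None:
            yield from solve(rest, new_env)
    for head, body in RULES:
        suffix = f"_{next(_fresh)}"
        new_env = unify(first, rename(head, suffix), env)
        if new_env is not None:
            renamed_body = [rename(g, suffix) for g in body]
            yield from solve(renamed_body + rest, new_env)

def query(goal):
    # Return the fully resolved goal for each solution, like Prolog's answers.
    return [tuple(walk(t, env) for t in goal) for env in solve([goal], {})]

print(query(("grandparent", "tom", "Who")))
# → [('grandparent', 'tom', 'ann'), ('grandparent', 'tom', 'pat')]
```

Every answer is derived from explicit facts and a rule you can read, which is the kind of predictability people credit Prolog with.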

In “Computing Machinery and Intelligence”, Turing made some really interesting observations about AI (“thinking machines” and “learning machines” as they were called then). It demonstrates stunning foresight:

> An important feature of a learning machine is that its teacher will often be very largely ignorant of quite what is going on inside… This is in clear contrast with normal procedure when using a machine to do computations: one’s object is then to have a clear mental picture of the state of the machine at each moment in the computation. This object can only be achieved with a struggle.

> Intelligent behaviour presumably consists in a departure from the completely disciplined behaviour involved in computation, but a rather slight one, which does not give rise to random behaviour, or to pointless repetitive loops.

You can view ChatGPT and Prolog as two ends of the spectrum Turing is describing here. Prolog is “thinking rationally”: it is predictable and logical. ChatGPT is “acting humanly”: it is an unpredictable, “undisciplined” model, but it does exhibit very human-like behaviours. We are “quite ignorant of what is going on inside”. Neither approach is enough to achieve AGI, but they are such fundamentally different approaches that it is difficult to conceive of them working together except through some intermediary like Subsumption Architecture.

This is what I expect too. And hope: LLMs are way too unpredictable to control important things on their own.

I often say LLMs are doing for natural language what early computation did for mathematics. There are still plenty of mathy jobs computers can’t do, but the really repetitive ones are gone and somewhat forgotten: nobody thinks of “computer” as a job title anymore.

yeah, they’re really in the wrong to think that we’d have some technical advancement within the last 40 years and we should expect more than a probabilistic text generator. 🙃

Like this?

I know how ML works; my comment was a persiflage on oversimplifying the topic of AI and logic. I originally marked it with an `/s` to indicate sarcasm, but I think this gets lost with newer generations, so I replaced the `/s` with the upside-down emoji (🙃), which also seems to indicate sarcasm.

Is /s way older than I thought it was?

No need to `s/\/s/🙃/g` on my account… but the comment is ambiguous either way, and I think that video is pretty decent, so… 🤹

Same old story: anything a computer can do is an “algorithm”; anything it cannot yet do is “AI”… 🙄

If you listen to the marketing of companies using machine learning, AI can do everything right now.

That is correct: AI has always been able to do everything “right now in the future”. ML, NNs, GPT, etc. are all terms to distinguish the actual algorithms from the abstract future goal of “AI”.

The literal first AI was an analog computer that the guy gave feedback to images on. If it’s a circle or a square, if it guesses right or wrong.

It’s literally the same training that we have used for models ever since, right up to today, and yet there are people trying to say generative imaging isn’t AI.
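The feedback loop described above (show the machine an image, tell it whether its guess was right, adjust) sounds like Frank Rosenblatt's perceptron learning rule. Here is a minimal Python sketch of that rule, assuming two made-up 3×3 binary "images" of a square outline and a rough circle. It illustrates the idea only and does not model the original analog hardware. Modern deep networks are usually trained with gradient-based methods rather than this exact rule, though the error-driven weight update is the same basic idea.

```python
# Hypothetical 3x3 binary "images", flattened row by row (1 = pixel on).
SQUARE = [1, 1, 1,
          1, 0, 1,
          1, 1, 1]
CIRCLE = [0, 1, 0,
          1, 0, 1,
          0, 1, 0]

# Labels: +1 for square, -1 for circle.
DATA = [(SQUARE, 1), (CIRCLE, -1)]

def predict(weights, bias, pixels):
    # Weighted sum of the pixel inputs, thresholded to +1 / -1.
    total = bias + sum(w * x for w, x in zip(weights, pixels))
    return 1 if total > 0 else -1

def train(data, epochs=20, lr=1.0):
    # Perceptron rule: only adjust the weights when the guess is wrong.
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for pixels, label in data:
            if predict(weights, bias, pixels) != label:
                # Wrong guess: nudge each weight toward the correct answer.
                weights = [w + lr * label * x for w, x in zip(weights, pixels)]
                bias += lr * label
                mistakes += 1
        if mistakes == 0:  # a full pass with every example classified correctly
            break
    return weights, bias

weights, bias = train(DATA)
print(predict(weights, bias, [0, 1, 1, 1, 0, 1, 1, 1, 1]))  # square missing a corner → 1
print(predict(weights, bias, [0, 1, 0, 1, 1, 1, 0, 1, 0]))  # circle with centre pixel → -1
```

Training stops as soon as a full pass makes no mistakes; here the weights settle on the corner pixels, the one feature that separates these two toy patterns.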

Y’all. It’s the exact thing AI was created with in mind.

Correct. When people say “ChatGPT isn’t real AI” they mean it’s not AGI (Artificial General Intelligence). The term “Artificial Intelligence” has been the proper term for the study of machine learning since the 1956 Dartmouth Workshop.

It’s all AI, from the computer player in Battlechess to ChatGPT. It doesn’t all use the same techniques or have the same capabilities.

I don’t think your characterisation of the Dartmouth Project and machine learning is quite correct. It was extremely broad and covered numerous avenues of research; it was not solely related to machine learning, though that was certainly prominent.

The thing that bothers me is how reductive these recent narratives around AI can be. AI is a huge field including actionism, symbolism, and connectionism. So many people today think that neural nets are AI (“the proper term for the study of machine learning”), but neural nets are connectionism, i.e. just one of the three major fields of AI.

Anyway, the debate as to whether “AI” exists today or not is endless. But I don’t agree with you. The term AGI has only come along recently, and is used to move the goalposts. What we originally meant by AI has always been an aspirational goal and one that we have not reached yet (and might never reach). Dartmouth categorised AI into various problems and hoped to make progress toward solving those problems, but as far as I’m aware did not expect to actually produce “an AI” as such.

> an analog computer that the guy gave feedback to images on. If it’s a circle or a square, if it guesses right or wrong

That reminds me of the Square Hole Meme.

That is my thought as well. We’ll continuously change the definition of intelligence in order to preserve the notion that intelligence is inherently human. Until we can’t.

🤖 I’m a bot that provides automatic summaries for articles:


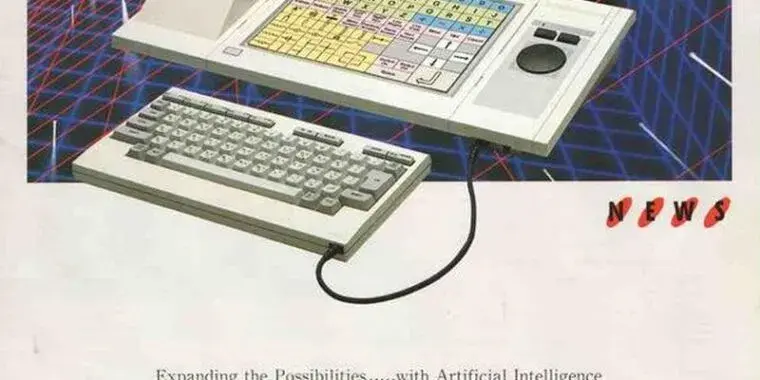

After all, the usually obsessive documentation over at Sega Retro includes only the barest stub of an information page for the quixotic, education-focused 1986 hardware.

SMS Power’s recently posted deep dive on the Sega AI Computer includes an incredible amount of well-documented information on this historical oddity, including ROMs for dozens of previously unpreserved pieces of software that can now be partially run on MAME.

Despite the Japan-only release, the Sega AI Computer’s casing includes an English-language message stressing its support for the AI-focused Prolog language and a promise that it will “bring you into the world of artificial intelligence.”

Indeed, a 1986 article in Electronics magazine (preserved by SMS Power) describes what sounds like a kind of simple and wholesome early progenitor of today’s world of generative AI creations:

Still, it’s notable how much effort the community has put in to fill a formerly black hole in our understanding of this corner of Sega history.

SMS Power’s write-up of its findings is well worth a full look, as is the site’s massive Google Drive, which is filled with documentation, screenshots, photos, contemporaneous articles and ads, and much more.

Saved 51% of original text.