If nearly half of traffic is bots, at least 40% must be npm install

FYI, 90% of those bots are scrapers and natural automation tools, which are harmless.

Yeah, and bots are software set up and configured by humans to do things for humans. It’s still kind of humans using the internet, just not actively at the keyboard.

This is how we end up needing the blackwall

Instead of murderous AI, it just covers every connected screen in adverts for Raid: Shadow Legends.

But is the Internet dying? What it doesn’t say is whether human participation is dwindling.

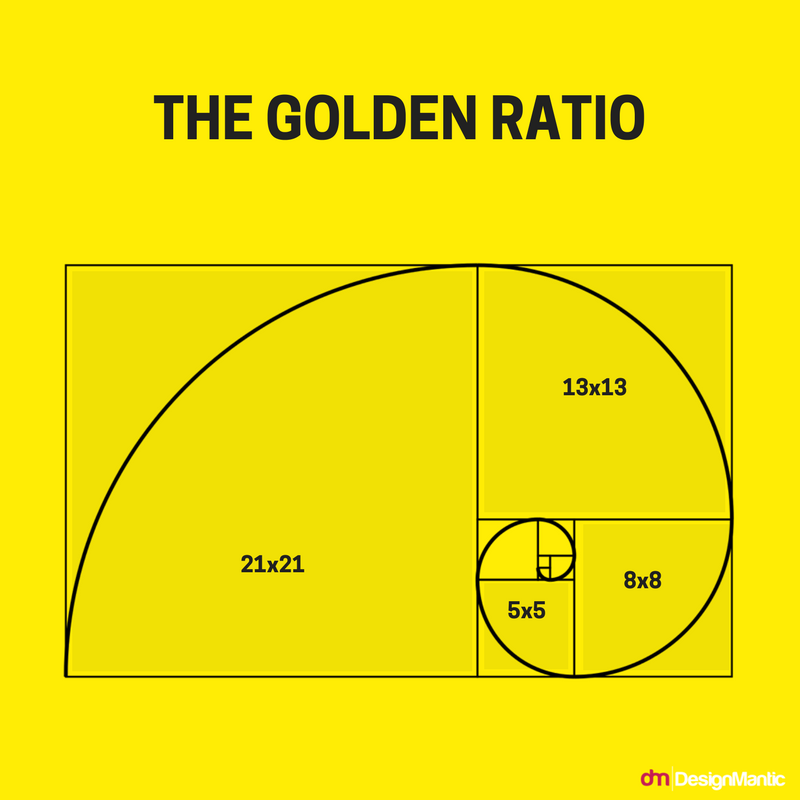

To keep it simple, I’ll work with small numbers. Imagine there are 10 humans online. Now imagine 1 bot online. Bots are 9% (1 in 11) of this imaginary online community. A year later, those same 10 humans are still online, but there are now 10 bots online; the bots are 50% of the community. This statistic can lead you to think there is less human participation when nothing happened to the humans. The difference is the raw number of bots. This is what I believe is happening: about the same number of humans, just an increasing number of bots scraping, posting, etc.
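A quick sketch of the head-count arithmetic above, using the same 10 humans and 1 or 10 bots. The helper name `bot_share` is made up for illustration; the point is that the bot share rises purely because the denominator grows, with the human count held fixed.

```python
from fractions import Fraction


def bot_share(humans: int, bots: int) -> Fraction:
    """Hypothetical helper: fraction of accounts that are bots, given head counts."""
    return Fraction(bots, humans + bots)


# Same 10 humans both times; only the number of bots changes.
for label, humans, bots in [("start", 10, 1), ("one year later", 10, 10)]:
    share = bot_share(humans, bots)
    print(f"{label}: {humans} humans, {bots} bots -> bots are {float(share):.0%}")
```

This prints 9% for the start and 50% a year later, matching the numbers in the comment.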

X/Twitter is dying because of mismanagement.

Let’s extend this thought experiment a little. Consider just forum posts; the numbers will be somewhat similar for articles and other writings, as well as photos and videos.

A bot creates how many more posts than a human? Being (ridiculously) conservative, we’ll say 10x more.

On day one: 10 humans are posting (for simplicity’s sake) 10 times a day each, totaling 100 posts. The bot is posting 100 a day, for a total of 200 human and bot posts, 50% of which are from the bot.

In your (extended) example, at the end of a year: the 10 humans are still posting a total of 100 times a day. The 10 bots are posting a total of 1000 times a day. Bots are at roughly 91% (1000 of 1100 posts), humans roughly 9%.
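The same thought experiment, now weighted by posting rate: 10 posts per human per day and the "ridiculously conservative" 10x rate (100 posts per day) per bot. The function name and constants are illustrative assumptions taken from the example, not measured rates.

```python
from fractions import Fraction

HUMAN_RATE = 10   # posts per human per day (from the example)
BOT_RATE = 100    # posts per bot per day: the assumed 10x multiplier


def bot_post_share(humans: int, bots: int,
                   human_rate: int = HUMAN_RATE,
                   bot_rate: int = BOT_RATE) -> Fraction:
    """Hypothetical helper: fraction of daily posts written by bots."""
    human_posts = humans * human_rate
    bot_posts = bots * bot_rate
    return Fraction(bot_posts, human_posts + bot_posts)


day_one = bot_post_share(10, 1)      # 100 bot posts out of 200
year_later = bot_post_share(10, 10)  # 1000 bot posts out of 1100
print(f"day one: {float(day_one):.1%}")
print(f"year later: {float(year_later):.1%}")
```

Day one comes out at exactly 50.0%, and a year later at 90.9%, which is where the "roughly 91%" figure comes from. Raising `BOT_RATE` pushes the human share toward zero even though the human post count never changes.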

This statistic can lead you to think human participation on the Internet is difficult to find.

Returning to reality, consider how inhuman AI bots are: each one could probably outpost a human millions or billions of times over, under millions of aliases. If you think search engines, articles, forums, reviews, and such are bonkers now, just wait a few years. Predicting general chaotic nonsense for the Internet is a rational conclusion, with very few islands of humanity. Unless bots are stopped.

Right now though, bots are increasing.

Bots are increasing. But the Internet is not dead/dying, just changing. Many of the bots in “The 10 bots are posting a total of 1000 times a day” are repost bots merely parroting human-generated content.

I wonder, though, whether the scrapers will be affected by the reposters and other AI-generated content.

You’re taking the word “dying” too literally

So change means “dying”? So every time a tadpole turns into a frog, a tadpole dies? Should we have protest signs that read, “FROGS KILL TADPOLES! DOWN WITH FROGS”?

No, that is not what I was talking about. In the current environment, it is entirely possible for some people to interact only with bots online without even knowing it. This may get worse and worse in the future. THAT is what a “dead” internet implies: essentially lifeless online interactions.

I suspect there will still be online interactions with humans, just more interactions with bots. Unfortunately, it’s we humans behind the mess. Even if we pass laws to stop it (or even force “I’m a bot” labels on bot accounts), some people won’t play by the rules. So the change is going to happen. We can try to persuade the public, but we know how well that works.

So what do you propose be done about it?

But what if I wanted to communicate with humans instead of propaganda-bots? Then yes, that Internet is dead, and there’s no real fucking reason to be on most of those sites.

I’m sure it’ll be fine… oh, totally unrelated: has anyone been on Usenet lately?

Most Usenet discussion groups don’t even get spambot posts these days.

Really? How do people get green cards nowadays, then?

There are chat groups on Usenet? How does that work? I have only ever seen it used for downloading stuff.

Usenet started out as a forum-like system, with individual messages grouped into discussion threads (the protocol worked kind of like email, with messages indicating which other message they replied to, so that client software could build a tree for each group). That side of it was eventually killed off by a lack of good moderation options and of support for embedded media.
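A rough sketch of the tree-building a newsreader does. Netnews articles carry a `Message-ID` header and a `References` header listing earlier articles in the thread, with the direct parent conventionally last. The article data below is invented, and this is a simplified version: articles whose parent is missing just become roots, whereas real newsreaders use smarter threading (for example, falling back to earlier `References` entries).

```python
from collections import defaultdict

# Hypothetical articles: (Message-ID, References, Subject).
# By convention the last entry in References is the direct parent.
ARTICLES = [
    ("<1@example>", [], "Is the internet dead?"),
    ("<2@example>", ["<1@example>"], "Re: Is the internet dead? (reply A)"),
    ("<3@example>", ["<1@example>", "<2@example>"], "Re: Is the internet dead? (reply to A)"),
    ("<4@example>", ["<1@example>"], "Re: Is the internet dead? (reply B)"),
]


def build_threads(articles):
    """Return (roots, children), where children maps a Message-ID to its direct replies."""
    known = {msg_id for msg_id, _, _ in articles}
    children = defaultdict(list)
    roots = []
    for msg_id, refs, _subject in articles:
        parent = refs[-1] if refs else None
        if parent in known:
            children[parent].append(msg_id)
        else:
            # No References, or the parent expired / never arrived: start a new thread.
            roots.append(msg_id)
    return roots, children


def render(roots, children, subjects):
    """Depth-first walk producing one indented line per article."""
    lines = []

    def walk(msg_id, depth):
        lines.append("  " * depth + subjects[msg_id])
        for reply in children[msg_id]:
            walk(reply, depth + 1)

    for root in roots:
        walk(root, 0)
    return lines


subjects = {msg_id: subject for msg_id, _, subject in ARTICLES}
roots, children = build_threads(ARTICLES)
print("\n".join(render(roots, children, subjects)))
```

Only the last `References` entry is used to pick the parent; the earlier entries matter only when a smarter client needs to recover from missing articles.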

Eh, most of those will just be scrapers, and fediverse inter-server communication is technically a bot.

It’s a bit like Kessler syndrome. The more bots on the net, the more crap we have to filter through, until eventually we can’t use it because there’s too much crap.

I welcome my human counterparts

This is the Dark Forest theory of the internet.

Do you have a written summary of that? I hate watching videos, and the Dark Forest book was fun, but without some pointers I don’t understand how it applies to the internet.

With the proliferation of AI-generated content, people can’t reliably identify human-generated content or be certain whether their online interactions are with a bot or a human. This scenario has apparently been called the dark forest internet because people will try to preserve their communities through more restrictive curation, effectively hiding from other humans as well as from bots.

I like some of Kyle’s videos, and I’m not doing a great job of summarizing all of the points made in this one. I found it worth the 15ish minutes, but probably should have watched it at higher speed.

IIRC, YouTube has transcripts?

Here is an alternative Piped link(s):

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source; check me out at GitHub.

FULLY AUTOMATED

FULL BRIDGE RECTUM FIRE

Can’t read this article. Can someone explain what their definition of a bot is?

Thanks. 32% malicious traffic is still a lot. The 50% increase in bad traffic in gaming is interesting, though.

Bots don’t run themselves; they are run by humans. I have a lot of them myself.

I am somewhat of a bot myself

A bot browsing or posting is not equivalent to a human doing so.