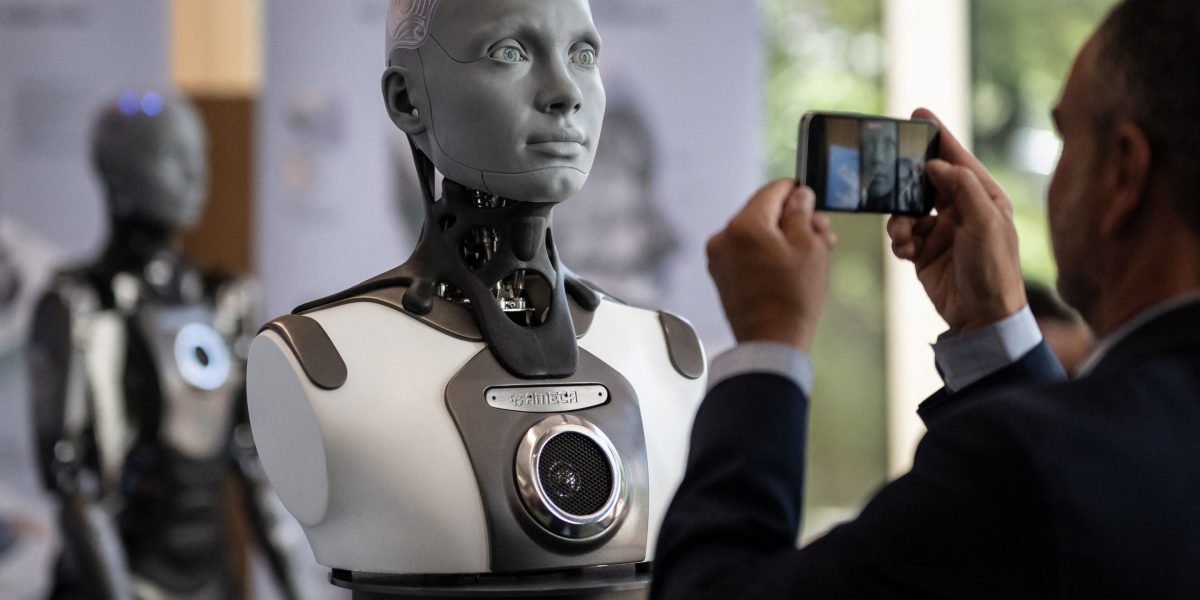

In an open letter published on Tuesday, more than 1,370 signatories—including business founders, CEOs and academics from various institutions including the University of Oxford—said they wanted to “counter ‘A.I. doom.’”

“A.I. is not an existential threat to humanity; it will be a transformative force for good if we get critical decisions about its development and use right,” they insisted.

It honestly makes my blood boil when I read “AI will destroy everything” or “kill everyone” or similar. No, it’s not going to happen. We are nowhere close to that, and we will never, intentionally or unintentionally, create unkillable beings like the Terminator, simply because there is no material to build one with that would make it live up to that.

Big tech companies that want to freeze AI just want to control it themselves. Full speed ahead!

@SSUPII @throws_lemy @technology concur.

Skynet is a red herring.

The real issue is that #AI is putting more stress on long-standing problems we haven’t solved well. Good opportunity to think carefully about how we want to distribute the costs and benefits of knowledge work in our society.

👉🏼“AI stole my book/art” is not that different from “show me in the search results, but only enough that people click through to my page”

👉🏼 “AI is taking all the jobs” is not that different from “you outsourced all the jobs overseas”

👉🏼 “the AI lied to me!” is not that different from “that twitter handle lied about me!”

The main difference is scale, speed and cost. As things continue to speed up, social norms and regulations fall behind faster. #ai

@SSUPII @throws_lemy @technology

AI scraping and stealing people’s art is literally nothing like a search engine.

Maybe that would hold up if the original artist was paid and credited/linked to, but right now there is literally zero upside to having your artwork stolen by big tech.

@donuts would you please share your thinking?

I certainly agree that you can see the current wave of Generative AI development as “scraping and stealing people’s art.” But it’s not clear to me why crawling the web and publishing the work as a model is more problematic than publishing crawl results through a search engine.

@throws_lemy @SSUPII @technology

Search engines are rightly considered fair use because they provide a mutual benefit to both the people who are looking for “content” and the people who create that same “content”. They help people find stuff, which basically is good for everyone.

On the other hand, artists derive zero benefit from having their art scraped by big tech companies. They aren’t paid licensing fees (they should be), they aren’t credited (they should be), and their original content is not visible or being advertised in any way. To me, it’s simply exploitation right now, and I hope that things can change in the future so that it can benefit everyone, artists included.

@donuts

For example, image search has been contentious for very similar reasons.

@throws_lemy @SSUPII @technology

@donuts

I certainly think that a Generative AI model is a more significant harm to the artist, because it impacts future, novel work in addition to already-published work.

However, in both cases the key issue is a lack of clear and enforceable licensing on the published image. We retreat to asking “is this fair use?” and watching for new Library of Congress guidance. We should do better.

@throws_lemy @SSUPII @technology

I don’t think it’s naive to think that there is an existential risk from AI. Yes, LLMs are not sentient and I don’t think ChatGPT is Skynet, but it seems pretty obvious to me that if we could create an entity that is generally intelligent, has goals, and is orders of magnitude smarter than humans, then it cannot be controlled. And that poses a risk if its interests come into conflict with ours.

Global mega-corps are already a kind of AI. They’re generally intelligent actors that find creative solutions to problems and work to advance their own interests, often at the expense of humanity, despite attempts to rein them in. A true AGI might be like a super-powered corporation that can have its big decision meetings every second instead of once a week.

However, I am also concerned with global regulation of this stuff because if state actors are the only ones allowed to have AI, that could get dystopian very quickly as well so… 🤷‍♂️

It makes my blood boil when people dismiss the risks of ASI without any notable counterargument. Do you honestly think something a billion times smarter than a human would struggle to kill us all if it decided it wanted to? Why would it need a Terminator to do it? A virus would be far easier. And who’s to say how quickly AI will advance now that AI is directly assisting progress? How can you possibly have any certainty on any timelines or risks at all?

Put down the crack, there is a huge ass leap between general intelligence and the LLM of the week.

Next you’re going to tell me Cleverbot is going to launch nukes. We are still incredibly far from general-intelligence AI.

I never said how long I expected it to take, so how do you know we even disagree there? But like, is 50 years a long time to you? Personally, anything less than 100 would be insanely quick. The key point is I don’t have a high certainty on my estimates. Sure, it might be perfectly reasonable that it takes more than 50, but what chance is there it’s even quicker? 0.01%? To me that’s a scary high number! Would you be happy having someone roll a die with a 1 in 10,000 chance of killing everyone? How low is enough?

I’ll be dead.

The odds are higher that Russia nukes your living area.

Well, I won’t be, and just because one thing might be higher probability than another doesn’t mean it’s the only thing worth worrying about.

An orca coming out of your asshole is just as likely as a meteor coming from space and blowing up your home.

Both could happen, but are you going to shape your life around whether or not they occur?

Your concern about AI should absolutely be directed at the governments and people using it. AI, especially in its current form, will not be doing a human culling.

Humans do that well enough already.

And if virus generation is an actual possibility, how can you be certain there won’t be another AI already made to combat such viruses?

I mean, even that hypothetical scenario is very scary, though lol

Sure, I’m not certain at all, maybe, but are you certain enough to bet your life on it?