
If you glitch outside of the stadium, you can actually load in GTA and Cyberpunk 2077 depending on which direction you go.

Instructions unclear, glitched my dick into the backrooms.

Just keep glitching, you’ve got potential.

Now I’m QPU misaligned…

Mario 64 File Select plays

There is no reason for CoD to be that large either.

Fewer people have the opportunity to pirate it with the fuckhuge download and install.

The biggest obstacle to piracy isn’t DRM. It’s ridiculous system requirements.

There is one simple reason: the bigger the game, the fewer other games you can install. So why spend time optimising size on disk, if it will just cut into the live service profits of your game?

Honestly, I’d not considered this angle before, but I would not be surprised at all if a product manager at Activision has had this thought.

I don’t think that’s a reason they use. If anything, I’d expect live service games to benefit from the game being smaller, because they want players to not uninstall it when they’re feeling finished.

But “why bother” certainly is. There aren’t that many players who won’t buy your game just because it’s big. Most games are focused on the initial sale. Optimizing for size is expensive. Why spend thousands of dollars of software dev time, and make the game more complex to test, on something that won’t affect many sales? Especially compared to fixing bugs or adding more noticeable features. Software dev is always a matter of tradeoffs. Unless you’re making something like a Mars probe, there will always be bugs. Always always always. How many more people will complain about a bug (which unexpectedly turned out to be game breaking in some niche case you didn’t think about) than about the install size?

> I don’t think that’s a reason they use. If anything, I’d expect live service games to benefit from the game being smaller, because they want players to not uninstall it when they’re feeling finished.

The smaller the game, the more likely you are to uninstall when you’re done, because it’s easier to re-install (and you don’t need to free up lots of space). Large size means you’ll keep it installed so you don’t miss the next content drop. Keeping it installed means you’re more likely to play, because you have less choice.

It’s a combination of sunk cost and FOMO.

It cuts both ways. If you’re low on disk space, you can uninstall 10 small games or just one large game.

Though personally, I’ve been happy since I threw in a larger SATA SSD; now I can move installs from my faster NVMe drives to the slower one when I want more fast space. I generally ignore the games smaller than 5 GB when trying to find more space.

I recently bought the 2021 remaster of a strategy game from 2004 and it was almost 50 GB. Add another 12 GB if for some reason you want the 4k textures on models from 2004. This game used to come on a CD.

4k and 8k textures are huge. Upscaling textures takes a surprising amount of extra space. That’s usually the single biggest thing bloating a game’s size.
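Each doubling of resolution quadruples the number of texels, so the growth is steeper than it sounds. A rough sketch, assuming uncompressed RGBA8 at 4 bytes per texel, BC7 block compression at about 1 byte per texel, and a full mip chain (which adds roughly a third); real engines and formats vary:

```python
# Rough texture storage estimate for square textures at common resolutions.
# Assumptions (not from the thread): RGBA8 uncompressed = 4 bytes/texel,
# BC7 block compression = ~1 byte/texel (16 bytes per 4x4 block),
# and a full mip chain down to 1x1, which adds roughly one third.

def mip_chain_texels(size: int) -> int:
    """Total texels in a square texture plus all of its mip levels down to 1x1."""
    total = 0
    while size >= 1:
        total += size * size
        size //= 2
    return total

def texture_bytes(size: int, bytes_per_texel: float) -> int:
    """Approximate bytes for a square texture with mips at a given texel cost."""
    return int(mip_chain_texels(size) * bytes_per_texel)

MIB = 1024 * 1024
for size in (1024, 2048, 4096, 8192):
    raw = texture_bytes(size, 4.0)  # uncompressed RGBA8
    bc7 = texture_bytes(size, 1.0)  # BC7-compressed (approximate)
    print(f"{size}x{size}: {raw / MIB:7.1f} MiB raw, {bc7 / MIB:6.1f} MiB BC7")
```

Mip levels smaller than a 4x4 block still cost a full block in BC7, so the compressed figures slightly undercount the tail of the chain, which is negligible at these sizes.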

The crazy thing is that a lot of today’s models are actually much simpler than they used to be. Look at comparisons between older and newer games, like Mario Galaxy versus Mario Odyssey, for good examples. This is done because it allows for more detailed environments: with fewer polygons (as long as the textures can be loaded fast enough), rendering goes much faster, because you’re no longer bottlenecked by polygon counts.

But this has caused texture sizes to balloon, because now you’re shifting nearly all the heavy lifting over to those textures instead of the polygons.

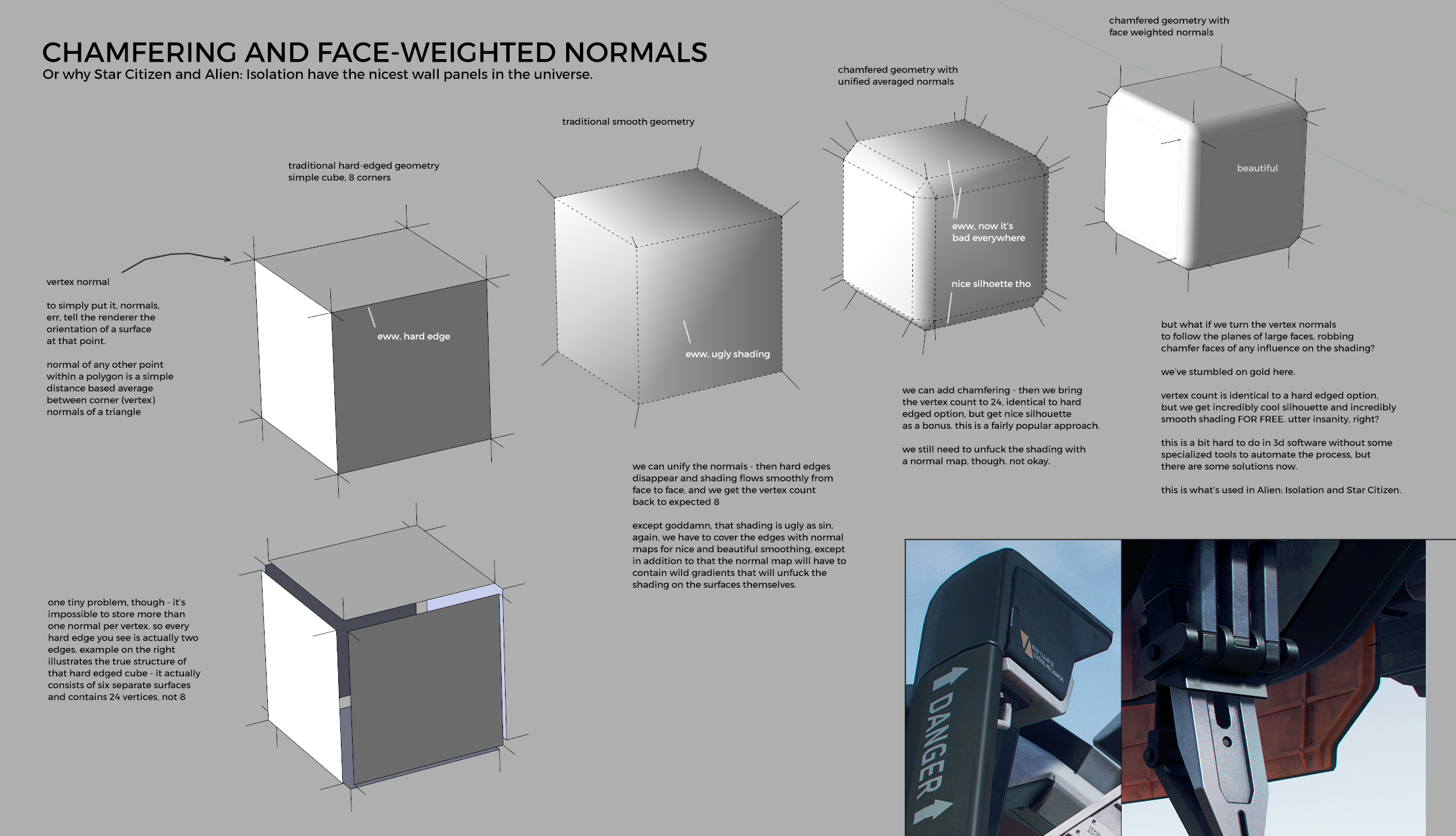

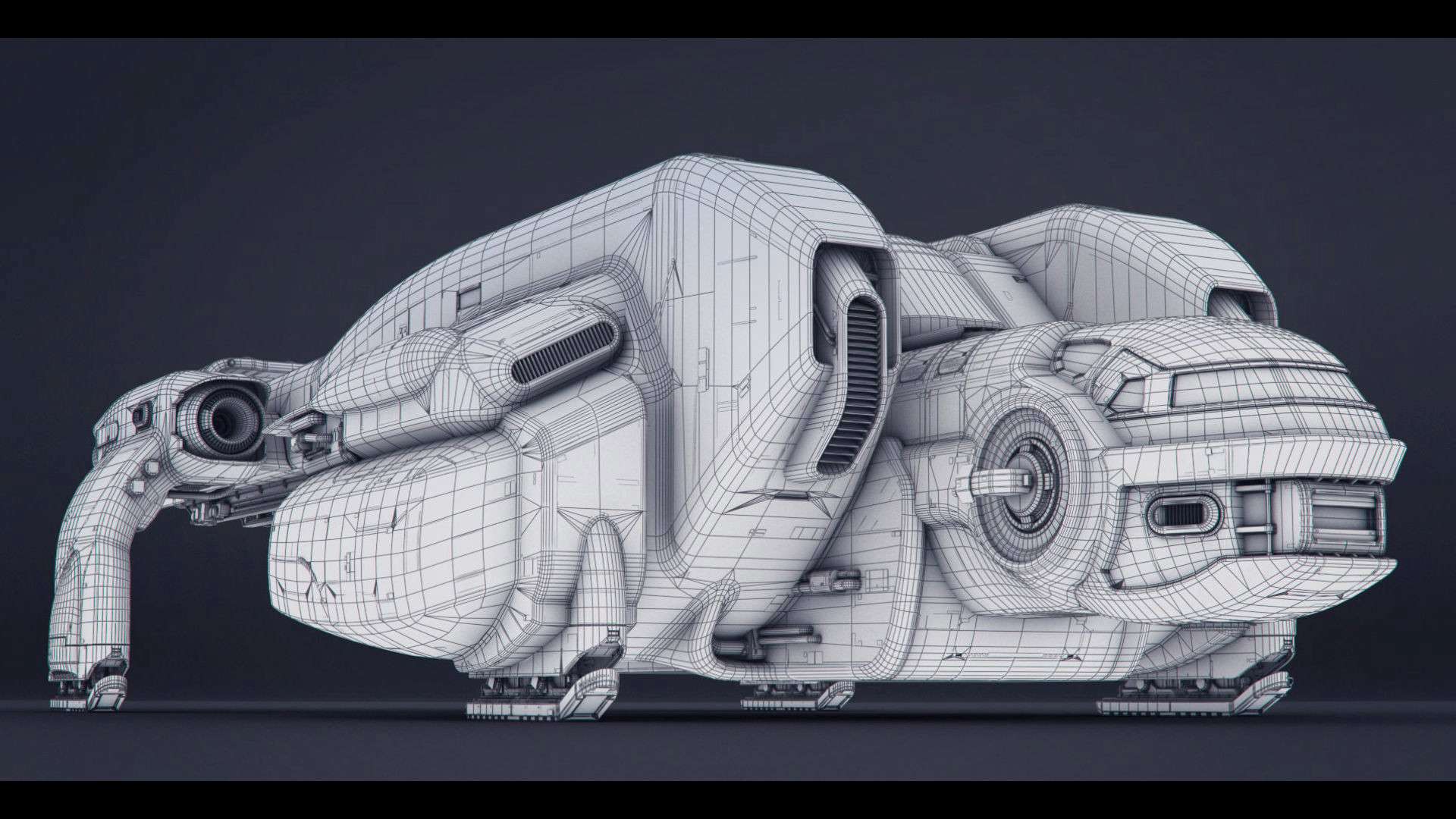

For more graphically intensive games, this is actually shifting back, because polys are a lot cheaper than texel density these days. Games like Star Citizen, Alien Isolation, Cyberpunk and Starfield (and hard-surface elements in other games, like firearms or vehicles) have insanely intricate hard-surface elements that the player needs to be able to get up close to, all around and inside. Those can’t use baked normals, because it’d be impossible to get a decent texel density and bake. So games are shifting to something called ‘face weighted normals’, which basically means that all the big bevels and chamfers are actually part of the model’s geometry instead of being baked down. Smaller details like greebling will be a flat plane shrink-wrapped to the curvature of a surface, with the detail baked into a displacement or normal map.
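One simple way to get weighted normals is to weight each face’s contribution to its vertices by the face’s area, so big flat faces keep clean shading and the small bevels take up the smoothing. The sketch below is that area-weighted variant in plain Python; modeling tools that offer a “weighted normal” option may use other weighting modes, so treat it as an illustration of the idea rather than any tool’s exact algorithm:

```python
# Area-weighted vertex normals: one simple form of "face weighted" normals.
# Each triangle adds its unnormalized face normal to its three vertices; since
# that vector's length is twice the triangle's area, larger faces dominate.
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v: Vec3) -> Vec3:
    length = (v[0] ** 2 + v[1] ** 2 + v[2] ** 2) ** 0.5
    if length == 0:
        return (0.0, 0.0, 0.0)  # unused or degenerate vertex
    return (v[0] / length, v[1] / length, v[2] / length)

def area_weighted_normals(positions: List[Vec3],
                          triangles: List[Tuple[int, int, int]]) -> List[Vec3]:
    accum = [[0.0, 0.0, 0.0] for _ in positions]
    for i0, i1, i2 in triangles:
        # Unnormalized face normal; its magnitude is proportional to face area.
        n = cross(sub(positions[i1], positions[i0]), sub(positions[i2], positions[i0]))
        for idx in (i0, i1, i2):
            for k in range(3):
                accum[idx][k] += n[k]
    return [normalize((a[0], a[1], a[2])) for a in accum]

# A large horizontal triangle sharing vertex 0 with a tiny vertical one:
# vertex 0's normal stays almost straight up because the big face dominates.
normals = area_weighted_normals(
    [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 1, 0), (0, 0, 1)],
    [(0, 1, 2), (0, 3, 4)],
)
print(normals[0])
```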

Thanks for the link, that’s actually really dope.

I love seeing optimization tricks like this.

To be clear, the 4k textures are an optional free extra download that bumps the install size up significantly above the ~50 GB. The 1024x1024 textures that come with it as standard will still have taken up a good chunk more than the 2004 version’s, of course.

I’ve dug around in the files a little bit for modding purposes, and as far as I can tell, one of the biggest reasons for the bloat is the way they implemented an ethnicity system in the remaster. In the original, units just had a skin colour that was roughly appropriate for the historical home region of their faction. In the remaster, they added a system that gives units some variety in appearance, taking into account where the unit was recruited; if you recruit soldiers in England, they’ll be paler on average than the ones recruited in Algeria. However, it seems like this was implemented by giving every single unit a unique texture for every ethnicity. There’s no common set of, say, five to ten faces for southern European men. Every single unit has its own southern European face, its own northern European face, its own North African face, etc. The same goes for the visible skin on the rest of the body. Multiply that across about a thousand different units and suddenly you’ve got an utterly absurd number of nearly identical textures. And since this is a strategy game, players are basically never looking at any of them at a zoom level that gives each unit more than a few dozen pixels.
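To put the multiplication in perspective, here is a back-of-the-envelope comparison between one texture per unit per ethnicity and a small shared pool per ethnicity. Only the thousand-unit figure comes from the comment; the number of ethnicities, textures per variant, pool size and per-texture size are hypothetical placeholders:

```python
# Per-unit unique textures versus a shared pool. Hypothetical numbers only,
# not measurements of the actual game files.
UNITS = 1000                    # "about a thousand different units" (from the comment)
ETHNICITIES = 6                 # hypothetical number of appearance groups
TEXTURES_PER_VARIANT = 2        # hypothetical: one face texture, one body-skin texture
SHARED_POOL_PER_ETHNICITY = 10  # hypothetical shared variants per group
BYTES_PER_TEXTURE = 1_398_101   # hypothetical: 1024x1024 BC7 with full mips, ~1 byte/texel

per_unit = UNITS * ETHNICITIES * TEXTURES_PER_VARIANT
shared = ETHNICITIES * SHARED_POOL_PER_ETHNICITY * TEXTURES_PER_VARIANT

def gib(count: int) -> float:
    """Total size in GiB for a given number of textures."""
    return count * BYTES_PER_TEXTURE / 1024 ** 3

print(f"per-unit textures: {per_unit} (~{gib(per_unit):.1f} GiB)")
print(f"shared pool:       {shared} (~{gib(shared):.2f} GiB)")
```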

The secret? SSDs.

There’s no need to be smart or clever about compression or implementation, since all files can be called immediately from the hard drive.

No need to create iterative processes now since you can just brute force the entire production. It is technologically superior, it creates superior performance, yet has the drawback of huge file sizes.

Speed is not the appropriate answer to bloat.

There’s still very much a need to use your brain when it comes to storing data. Bloated data takes far longer to upload, download and load, both at startup and between scenes, and not everyone has an SSD. Heck, even those who do can benefit from smaller games. Also, if you do it properly, performance will be significantly better, since less data is being loaded from disk into memory and VRAM.

I think people also have an odd idea of what it takes to photorealistically depict a fucking stadium full of people and players with realtime reflections and sweating normal maps. The texture info alone is gonna be huge, but likewise there are tons of high res models animating in realtime all over the screen. So yeah, this being a gigantic install makes sense if 4k res gaming is what people want to play.

Or uncompressed audio in multiple languages/different soundtrack variants (original/remaster)

Audio is also huge for most games. Especially if the game has a lot of dialogue and offers several languages. AAA, dialogue heavy games can have hundreds of hours of audio for a single language.
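For a sense of scale, uncompressed 16-bit PCM at 48 kHz costs 345.6 MB per hour per channel. A quick calculation, assuming mono dialogue, 200 hours per language and 10 languages (all hypothetical figures):

```python
# Size of uncompressed dialogue audio. Hypothetical assumptions:
# 16-bit PCM, 48 kHz, mono, 200 hours per language, 10 shipped languages.
SAMPLE_RATE = 48_000   # samples per second
BYTES_PER_SAMPLE = 2   # 16-bit
CHANNELS = 1           # mono dialogue

def pcm_bytes(hours: float) -> int:
    """Bytes of raw PCM for the given duration in hours."""
    return int(hours * 3600 * SAMPLE_RATE * BYTES_PER_SAMPLE * CHANNELS)

HOURS_PER_LANGUAGE = 200
LANGUAGES = 10

per_language = pcm_bytes(HOURS_PER_LANGUAGE)
print(f"per language:  {per_language / 1e9:.1f} GB")
print(f"all languages: {per_language * LANGUAGES / 1e9:.1f} GB")
```

Numbers like these are why dialogue is normally shipped with lossy compression rather than as raw PCM.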

They should really be setting that up in a way that users select their language and only download the files related to the selected language. I don’t even need the other official language for my country, let alone dozens of other languages I don’t speak.
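The selection itself is simple in principle: ship voice (and video) as separate per-language packs and have the installer resolve the base content plus the chosen language. A minimal sketch; the manifest structure, pack names and sizes are all hypothetical and don’t reflect any particular store or launcher:

```python
# Hypothetical per-language install: base content plus only the selected voice pack.
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Pack:
    name: str
    size_gb: float
    language: Optional[str] = None  # None means every install needs it

MANIFEST = [
    Pack("base", 60.0),
    Pack("voice_en", 12.0, "en"),
    Pack("voice_fr", 12.0, "fr"),
    Pack("voice_de", 12.0, "de"),
    Pack("voice_ja", 12.0, "ja"),
]

def packs_for(language: str, manifest: List[Pack]) -> List[Pack]:
    """Keep language-neutral packs and the pack matching the chosen language."""
    return [p for p in manifest if p.language is None or p.language == language]

selected = packs_for("fr", MANIFEST)
print([p.name for p in selected], sum(p.size_gb for p in selected), "GB")
```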

Yeah, I feel like the improvements in gaming hardware have gone 50% to improving dynamic systems and graphics, and 50% to messy project bloat. Not to mention the constant crunch culture means they never have time to clean things up before launch, and after launch is for game breaking bugs and DLC.

I mean I can understand how textures add up but 161 gigs for a basketball game seems like a lot of unoptimized stuff going on

The majority of the space on modern games is taken up by high definition audio. Those thousands of commentator lines add up to dozens of gigs alone.

Idk if NBA games have a lot of cutscenes, but those are sometimes the culprit: separately rendered videos in every language the game supports.

So they’re likely skipping an easy optimization by not using Opus.
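The gap is large because 48 kHz, 16-bit stereo PCM runs at 1536 kbit/s, while lossy codecs like Opus are typically run at a small fraction of that. A quick per-hour comparison; the Opus bitrates below are example settings, not what any particular game uses:

```python
# Storage per hour of audio at a few bitrates.
def megabytes_per_hour(kbit_per_s: float) -> float:
    """Convert a bitrate in kbit/s to megabytes (10^6 bytes) per hour."""
    return kbit_per_s * 1000 / 8 * 3600 / 1e6

pcm_stereo = 48_000 * 16 * 2 / 1000  # kbit/s for 48 kHz, 16-bit, 2 channels

for label, rate in [("PCM stereo", pcm_stereo),
                    ("Opus 128 kbit/s", 128),
                    ("Opus 64 kbit/s", 64)]:
    print(f"{label:16s} {megabytes_per_hour(rate):8.1f} MB/hour")
```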

Madden is only like 50 gigs I think

Do they not even use compression anymore? Ever since Steam came out, I’ve been wondering whether I was downloading compressed game files or wasting bandwidth every time. They used to try to conserve storage, compressing everything to fit on one or two discs (or floppy disks before that).

If you watch the download graph, the blue bars are what you’re downloading, and they’re always significantly smaller than the green line, which is what’s being saved to disk.
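The gap between the two lines is what you’d expect if content is sent compressed and expanded before being written to disk. A small illustration using Python’s zlib as a stand-in codec; it is not Steam’s actual chunk format or compression scheme:

```python
# Compressed bytes travel over the network; decompressed bytes hit the disk.
# zlib is only a stand-in codec for illustration.
import zlib

# Repetitive, text-like payload standing in for game data.
original = b"".join(b"vertex %d 0.0 0.0 1.0\n" % (i % 100) for i in range(50_000))

downloaded = zlib.compress(original, 9)  # what goes over the wire
written = zlib.decompress(downloaded)    # what gets saved to disk

print(f"downloaded {len(downloaded):,} bytes, wrote {len(written):,} bytes "
      f"({len(downloaded) / len(written):.1%} of disk size)")
```

How big the gap is depends heavily on the data: text and uncompressed audio shrink a lot, while already-compressed textures and video barely shrink at all.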

“Japanese game dev has been here”

“How can you tell?”

“30fps cap”

“Is Bethesda a Japanese word?”

Image Transcription:

A version of the White Man Has Been Here meme showing a Robert Griffing painting of two Native American trackers examining footprints in the snow. One is standing and the other squatting, both are holding rifles. The squatting tracker is saying “western game dev has been here.”

His standing companion asks “How can you tell?”

The first tracker replies, “161 GB for a basketball game”.

The footprints in the snow have been replaced by a screenshot of an X/Twitter notification by Saved You A Click Video Games replying to a post by GameSpot reading “NBA 2K24 File Size Revealed, And It’s Even Bigger Than Starfield - Report dlvr.it/Svvggh2”, accompanied by an image of a basketball player for the Lakers. Saved You A Click Video Games’ response reads “It’s 161GB on Xbox Series X|S.”


I remember being floored to see that GTA V on my PS3 was an 8 GB disc. Zero optimization for consoles these days.

When Baldur’s Gate 2 came out, it was on 6 CDs, like 4 GB. The game was massive.

UT2k3 was 7 discs iirc.

Funny, UT2k4 was only 6. Better compression, maybe?

That’s so wild, I had a pirated copy that was a single disc install. How’s that work?

DVD ISO?

Must’ve been. Why didn’t they do commercial releases that way, are DVDs that expensive?

At the time, we all had CD-ROM drives and DVD-ROM drives were expensive. It’d be a few more years until PC games started shipping on DVD, and Steam was in its baby stage at the time. My copy of San Andreas was a DVD-ROM, but pretty much every other game I bought was on CD until Civ 5, which did come on DVD… along with a Steam code that was required, thus making the DVD useless haha.

I remember Hexen was on eight damned floppies. What a pain.

Wait, what?

Meanwhile it’s 98 GB on PC.

Is this for real?

It’s because they just Ctrl+X last year’s game directory but didn’t put it back in a .ZIP file.

I remember when even having a 160 GB hard drive was unthinkable.

The first computer I ever used didn’t have a hard drive (Macintosh 128k). The first hand-me-down computer I had in my room was a 286 with a 500 MB HDD.

I vaguely remember one evening when my father drilled into me that if I got a dialogue box with the text [Disk Read Error, (Abort) (Retry) (Initialize)?], Initialize was never the correct option. Apparently I had deleted one of his projects by mistake. I was 5 or 6 at the time.

I think my first harddrive was smaller than that, and we had to compress it to get double the space. However, everything ran 4x slower.

My first computer was a Coleco/ADAM with a tape drive.

My first “IBM Compatible” computer was a 286 with a 40 MB hard drive. It also had a CD-ROM which at the time was this whole huge futuristic thing. We had an entire encyclopedia on a CD! They could hold hundreds of megabytes! More storage than I could ever need!

I have a camera drone with a 128 gigabyte microSD card in it. The card is smaller than my fingernail. I freely admit that having that much storage on something so small just doesn’t add up in my brain. I know it’s possible, and it works; I’m just someone who grew up with megabytes being something that took up a lot of physical space, and now something a few orders of magnitude larger could get lost in my pocket.

I remember watching ZDTV/TechTV in the 90s and Leo Laporte referring to an 8GB drive as “an entire universe in there”.

My buddy’s first HDD for his Amiga held 20 MB. It was the size of a toaster, and cost something like $400. Now, a chip the size of my fingernail can hold a terabyte, and costs less than that HDD. I know it’s slowed down a lot, but I really wonder where we’ll be in another forty years.

Americans like BIG

I’d prefer they spend time optimizing CPU, GPU, RAM usage instead of storage size.

These are rarely mutually exclusive. Rather the opposite, as these textures and whatnot need to be pumped through RAM and VRAM via the CPU.

In the third world, internet is expensive, so shit like this would force a lot of people to spread the download over more than a month.
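Even before per-GB pricing or data caps come into it, raw transfer time adds up. A rough calculation for the 161 GB figure at a few sustained speeds; the speeds are illustrative, and real throughput is often lower than the advertised rate:

```python
# Time to download a given size at a sustained speed (decimal GB, Mbit/s).
SIZE_GB = 161  # the Xbox Series X|S figure from the thread

def hours_to_download(size_gb: float, mbit_per_s: float) -> float:
    """Hours needed to transfer size_gb gigabytes at mbit_per_s megabits per second."""
    bits = size_gb * 1e9 * 8
    return bits / (mbit_per_s * 1e6) / 3600

for speed in (2, 10, 50, 300):
    print(f"{speed:>4} Mbit/s: {hours_to_download(SIZE_GB, speed):6.1f} hours")
```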

Actually, internet is cheaper in the third world than in the first world. But PC hardware is expensive.

Obviously it depends on the country, but in general the internet is the worst part; there’s a reason the piracy scene is so big in India, although maybe it’s just because the servers are farther away on average.

Diablo 2 LoD installed: 3 GB. Diablo 2 Resurrected: 25 GB.

Are 4K images consuming that much more space?

No, but raw audio and high res 3d models are

3D models consist of images, right? The coordinates for the images don’t take up much?

Yes but also more polygons for more detailed models. Which… is more space but it can’t be that much lol idk tho

It depends on how they store the models. If they’re using normal mapping (which they probably are), they will need to store the following for each vertex: position (x, y, z), normal (x, y, z), texture coordinates (u, v), tangent (x, y, z) and bitangent (x, y, z). Assuming a custom binary format with 32-bit (4-byte) floats, that’s 14 floats, or 56 bytes per vertex. The Sponza model, which is commonly used for testing, has around 1.9 million vertices: in our hypothetical format, at least, that’s 106.4 MB for the vertices. But we also have to store the indices, which are an optimisation that prevents repeating shared vertices. Sponza has 3.9 million triangles; 3 32-bit integers per triangle gets us an additional 46.8 MB. So with that naive format (which should be extremely fast to load) and a lot of models, 3D model data is not an insignificant contributor to file size.
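The arithmetic above can be checked with Python’s `struct` module, assuming the same hypothetical layout: a little-endian record of 14 32-bit floats per vertex and three 32-bit unsigned indices per triangle:

```python
# Hypothetical uncompressed mesh layout from the comment above.
import struct

VERTEX = struct.Struct("<3f3f2f3f3f")  # position, normal, uv, tangent, bitangent
TRIANGLE = struct.Struct("<3I")        # three 32-bit vertex indices

vertex_count = 1_900_000
triangle_count = 3_900_000

vertex_bytes = VERTEX.size * vertex_count
index_bytes = TRIANGLE.size * triangle_count
print(f"{VERTEX.size} bytes/vertex, vertices {vertex_bytes / 1e6:.1f} MB, "
      f"indices {index_bytes / 1e6:.1f} MB")

# Packing one vertex confirms the on-disk record is exactly VERTEX.size bytes.
record = VERTEX.pack(0, 0, 0,   # position
                     0, 0, 1,   # normal
                     0.5, 0.5,  # uv
                     1, 0, 0,   # tangent
                     0, 1, 0)   # bitangent
assert len(record) == VERTEX.size
```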

That’s nothing compared to ARK.

MORE!

The uncompressed sizes make for a much faster install. Repackers like FitGirl compress the duck out of the game files to make the download smaller but it takes ages to install the games afterwards.
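That is the usual trade: heavier codecs tend to produce smaller archives but take longer to compress and often to decompress. A small sketch comparing zlib at level 1 with xz/LZMA at preset 6 plus the extreme flag on the same synthetic data; both are stand-ins, since repack tools use their own pipelines, and timings vary with the data and the machine, so none are claimed here:

```python
# Fast versus heavy compression on the same synthetic data.
import lzma
import time
import zlib

data = b"".join(b"texture_%05d.dds size=%d\n" % (i, (i * 7919) % 65536)
                for i in range(50_000))

def timed(fn, *args, **kwargs):
    """Return (result, elapsed seconds) for a single call."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start

fast_blob, fast_c = timed(zlib.compress, data, 1)
heavy_blob, heavy_c = timed(lzma.compress, data, preset=6 | lzma.PRESET_EXTREME)
fast_out, fast_d = timed(zlib.decompress, fast_blob)    # the "install" step
heavy_out, heavy_d = timed(lzma.decompress, heavy_blob)

assert fast_out == data and heavy_out == data
print(f"raw   {len(data):>9,} bytes")
print(f"zlib1 {len(fast_blob):>9,} bytes, compress {fast_c:.3f}s, decompress {fast_d:.3f}s")
print(f"xz6e  {len(heavy_blob):>9,} bytes, compress {heavy_c:.3f}s, decompress {heavy_d:.3f}s")
```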

No it’s fine, I’ll just buy another external hard drive