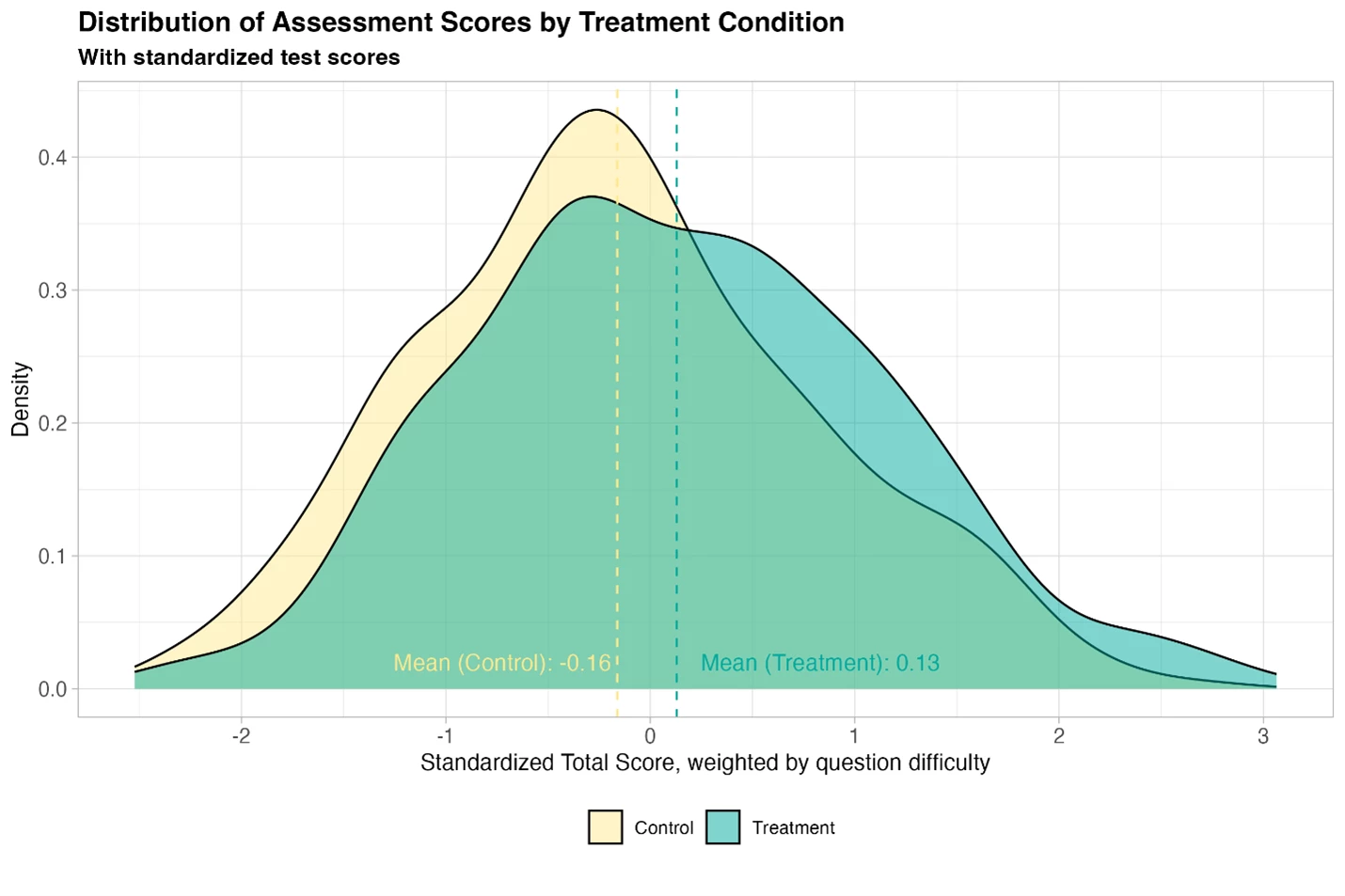

Is this seriously what they call overwhelmingly positive? Even if they had the sample size to claim this difference is significant, it looks quite small given the variation. Mind you, these kids attended a lot of extra study hours compared to other kids who did not enroll. Did they even try to control for this?

This 100% looks like someone’s attempt to sell an AI product to third world country governments.

I’ll need the full peer-reviewed paper which, based on this article, is still pending? Until then, based on this blog post, here are my thoughts as someone who’s education-adjacent but scientifically trained.

Most critically, they do not state what the control arm was. Was it a passive control? If so, of course you’ll see benefits in a group engaged in AI tutoring compared to doing nothing at all. How does AI tutoring compare to human tutoring?

Also important, they were assessing three areas - English learning, Digital Skills, and AI Knowledge. Intuitively, I can see how a language model can help with English. I’m very, very skeptical of what they define as those last two domains of knowledge. I can think of what a standardized English test looks like, but I don’t know what they were assessing for the latter two domains. Are digital skills their computer or typing proficiency? Then obviously you’ll see a benefit after kids spend 6 weeks typing. And “AI Knowledge”??

ETA: They also show a figure of test scores across both groups. It appears to be a composite score across the three domains. How do we know this “AI Knowledge” or Digital Skills domain was not driving the effect?
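To make that worry concrete, here is a minimal Python sketch with made-up numbers. The per-domain scores are hypothetical (the article's figure only seems to show the composite), and treating the composite as an unweighted mean of the three domains is also an assumption. It splits the composite gap into the share contributed by each domain.

```python
# Hypothetical per-domain mean scores (0-100). NOT data from the study.
control = {"English": 50.0, "Digital Skills": 48.0, "AI Knowledge": 30.0}
treatment = {"English": 52.0, "Digital Skills": 55.0, "AI Knowledge": 51.0}


def composite(scores):
    """Unweighted mean across domains (an assumed way to build the composite)."""
    return sum(scores.values()) / len(scores)


def gap_shares(treat, ctrl):
    """Fraction of the total treatment-control gap contributed by each domain.

    Assumes the total gap is non-zero.
    """
    gaps = {domain: treat[domain] - ctrl[domain] for domain in ctrl}
    total = sum(gaps.values())
    return {domain: gap / total for domain, gap in gaps.items()}


composite_gap = composite(treatment) - composite(control)
shares = gap_shares(treatment, control)

print(f"Composite gap: {composite_gap:.1f} points")
for domain, share in shares.items():
    print(f"{domain}: {share:.0%} of the gap")
```

With these invented numbers, most of the composite gap comes from "AI Knowledge" while English barely moves. A per-domain breakdown in the paper would settle whether something like this is happening in the real data.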

ETA2: I need to see more evidence before I can support their headline claim of huge growth equal to two years of learning. Quote from article: “When we compared these results to a database of education interventions studied through randomized controlled trials in the developing world, our program outperformed 80% of them, including some of the most cost-effective strategies like structured pedagogy and teaching at the right level.” Did they compare the same domains of growth, i.e., English instruction to their English assessment? Or are they comparing “AI Knowledge” to some other domain of knowledge?

But, they show figures demonstrating improvement in scores testing these three areas, a correlation between tutoring sessions and test performance, and claim to see benefits in everyday academic performance. That’s encouraging. I want to see overall retention down the line - do students in the treatment arm show improved performance a year from now? At graduation? But I know this data will take time to collect.

“How does AI tutoring compare to human tutoring?” That’s the first question that came to my mind!

In the real world, it depends on whether humans are available to do the tutoring. Even if human tutors are better than AI, AI is better than no tutor.

Personally, I don’t think the evidence has established an AI tutor is better than no tutor. Maybe for English, certainly not for Math, Science, History, Art, or any other subjects.

LLMs can be wrong - so can teachers, but I’d bet dollars to donuts the LLM is wrong more often.

This is true. I was just thinking in the case of the study, but /any/ help when needed is better than no help.

Not really, because humans are expensive and so only the rich can afford them. My kids get half an hour a week of music tutoring, and it costs me the time to get them there plus the cost of the teacher. An AI could be there at all practice times as well, for whenever there is a teachable moment.

Unfortunately, I don’t think we’re close to getting an AI music tutor. From a practical standpoint, a large language model can’t interpret music.

From an ethos standpoint, allowing AI to train a musician breaks my heart.

I could see a world where an AI teaches the mechanics of music, but is not relied on for evaluation of the student’s imagination in applying the mechanics.

When I was in art classes I wanted the class to teach me the techniques I did not know, but I was usually disappointed.

Like my life drawing classes would be me drawing images, and then getting judged on /what I already knew how to do/.

What I felt I would have benefited from was more along the lines of “here are three different shading techniques and how they can be used” or “here are three ways to use oil paints you’ve not used before.”

I always had ideas of images to create; what I could have benefited from was intros to more tools and ways to use those tools.

I could see an AI being able to do that for students of the arts.

That is a good - but different - point.

Though a large part of tutoring music is just “play that again,” said in a way that gets the music actually played again.

They just discovered that kids learn about AI and computers by using AI and computers.

There’s so much to legitimately worry about with AI, that we often lose sight of its potential good.

The moment you become truly aware of how often AI is incorrect is the moment you are ready to use it properly.

I do hope we have started a long study of the short- and long-term influence of LLMs on critical thinking skills.

AI just needs grounding. If you ask GPT-4 to look online before answering you (giving it a database of reliable info), it will always be correct. With this technique AI can be a great teacher.

GPT-4 is not capable of searching online. It’s trained on a fixed database with no internet access.

OpenAI’s GPT-4 can’t?!? I am fairly certain it can, considering I use this feature daily.

I’m pretty worried about the massive acceleration of our environmental destruction of the planet that it causes, so I hope they turn it to getting us the fuck off this rock pretty soon then.

Capitalism will fix this (bizarrely). Making AI more efficient (read: more profitable) is on LOTS of people’s minds right now.

Whether it’s more efficient chips, better algorithms or whatever, rest assured, LOTS of effort is going into it.

Our environment will still be destroyed, but it won’t be AI that does it, just boring greed.

“Capitalism will fix this”

I won’t hold my breath.

I’m worried about ideological capture. Since teachers are individuals, it’s fairly hard to uniformly push extreme views - there’s always gonna be some resistance.

That no longer exists with AI. Whoever produces it has full control over what it says, knows and “thinks”.

Of course the World Bank is pushing this.