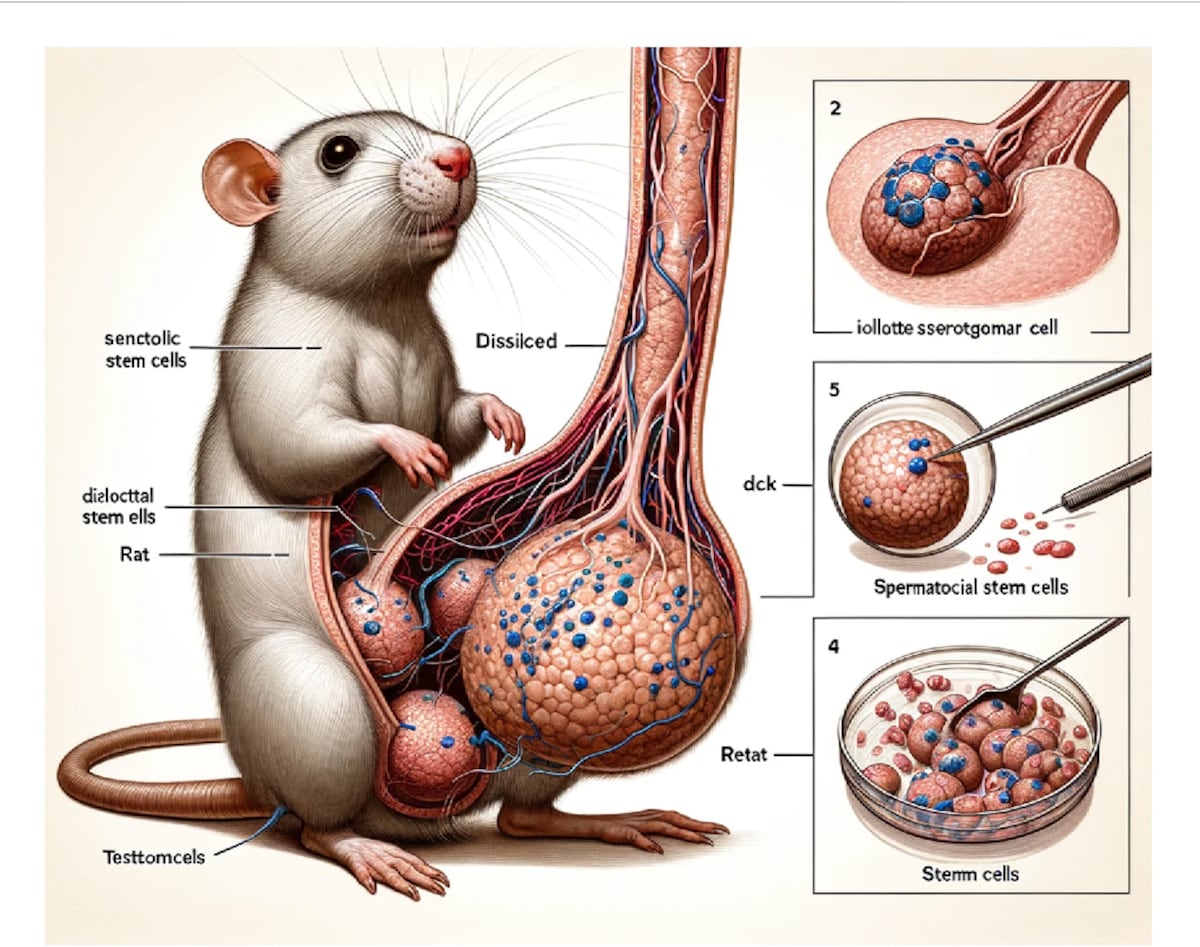

I like how the dick rat has become a beacon of scientific malpractice.

“Right now it is impossible to know how big this gray area is, because scientific journals do not require authors to declare the use of ChatGPT, there is very little transparency […]”

This might help to get statistics on how many people, e.g., use ChatGPT to brush up their language, but I doubt anybody who uses the unedited output of an LLM to actually “write” large parts of an article would declare that they did it.

I’m using ChatGPT to help me simplify the very terse language of an academic paper, and I must say I’m super unimpressed so far. I don’t understand how people could possibly use it to write anything of substance given the output it generates; it generates redundant, overlapping, and superficial responses that need to be heavily edited to make sense. I’m pretty much better off trying to decipher the paper by myself.

I feel the same way, but you say it very nicely.

I don’t know how to express this sentiment without sounding pretentious and judgy AF.

It can’t write much of substance. The only people using it in science for anything more than fluff are people who don’t speak English well or who have no business writing papers. I sympathize with the former, but I don’t understand why those folks wouldn’t just either publish in a language they speak or get an English-speaking coauthor to help write in English. I wouldn’t ever use it to write an article. Even editing, it tends to butcher scientific nuance.

It is good at writing fluff though, which is helpful for things like letters of recommendation for undergraduates.

“I don’t understand why those folks wouldn’t just either publish in a language they speak or get an English-speaking coauthor to help write in English”

Major journals are in English, and “just getting an English-speaking coauthor” might not be as easy as you make it sound, especially considering that using an LLM to fix grammar is definitely going to be easier and faster.

I’m in science. It isn’t difficult to get an English speaking coauthor. Going to an LLM is easier and faster, sure, but if someone can’t understand the output then they have no idea if their text is being translated correctly.

Oh yeah, that’s absolutely true. I was thinking more of people who aren’t fluent in English and would like to make their text a bit more natural or fluent, which is what I’ve done.

Ah, I would consider that fluff, which is okay in my book. I don’t use it for writing, personally, but what I tell my students is that if it’d be fine for a friend to do the thing and not get coauthorship, it’s fine to use AI for that (provided you acknowledge it, as you would a friend who provides some helpful comments on a draft). Proofing and suggesting minor stylistic things fall under that umbrella IMO.

LLMs can only copy information; they don’t evaluate it. So they end up with a quality level around the median of the input. And since most content is pretty crappy, you end up with mediocre, crappy output.

Thankfully, there’s very high demand for mediocre crap (which is why there’s so much of it).

Well, of course if you want substance…

I’m not a native English speaker, and I occasionally use GPT to do some light editing when writing longer English texts (brushing up grammar, etc.), but I’ve always vetted the results and often changed them a bit so they sound more like “me”, if that makes any sense. GPT’s also been very handy for quick’n’dirty translations from Finnish to English (which, e.g., Google Translate is notoriously bad at), but I always make sure the result isn’t complete dada, and I only do that in pretty trivial cases, like if I want to quote a piece of some Finnish article in an English-speaking Lemmy community.

Like you, I’ve tried using GPT to summarize academic texts, and I haven’t been too impressed with the results either, though it’s been a while. While there’s a lot of unwarranted hype around LLMs, I’ve definitely found them useful for a bunch of different tasks, but I understand their limitations, so I rarely, e.g., try to get factual information out of them (at least not without very thorough verification).

ChatGPT is now at the intelligence of a sleep-deprived undergrad, using the word “terrible” five times in one run-on sentence the morning before the paper is due.

Soon, ChatGPT will train itself on these articles, giving it a stronger and stronger probability of using words like ‘meticulous’ and ‘commendable’. Eventually all output will be nonstop repetition of ‘commendable’.

I’m wondering if this is happening with people as well, and whether some of these could have been written by humans who subconsciously picked up AI phrasing by reading too much AI text.

Commendaticulous you mean

Using chatgpt is not bad in itself, but then you have to make sure to meticulously read the generated article to see if it’s all correct. It’s commendable people want to make sure it’s easy to read and not mostly changrish or so. An article is just for the transfer of knowledge anyway, who or what writes it doesn’t matter as long as the facts are correct.

Using ChatGPT doesn’t change ChatGPT.

A language model system learns during a phase that’s called “training”. After training, the system doesn’t learn. We, as users, interact with it only after training.

Edit: Oops! My comment above was out of context because I didn’t read the article, only the title, and I misinterpreted it as meaning that “ChatGPT overused certain words”, which is not what the article says.

You think OpenAI has just completely stopped trawling the internet for content? Nobody knows how often they update ChatGPT; it’s likely a lot.

Oops, my bad … so I corrected my above comment.

A small, nuanced correction to your comment about language models being pre-trained: that’s true for GPT (it’s the P) and for all modern high-performance language models since 2017, but it was actually not in fashion before that period, and may not be again at some point in the future.