
1·1 day ago

You cannot run the Perchance AI locally, because it all runs on Perchance’s servers. You can download Perchance generators, but their AI features will not work when run locally.

So you’d need to build your own interface to whatever AI generators you have installed on your machine.

2·1 day ago

Use a different emotion in the prompt I guess?

Something to remember though is that all words affect the generated image. So unless you’ve actually locked in how the character looks with other words in the prompt, using a different emotion word may change some aspect of how the character looks as well.

You could also use the BREAK keyword, which cuts the prompt into separate chunks. Then a word can relate to one part of the prompt without affecting the other parts. The classic example:

`blue dress yellow hat` can make various elements in the image blue or yellow, whereas `blue dress BREAK yellow hat` would make only the dress blue, and only the hat yellow. (In theory.)
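As a rough illustration of what “cutting” the prompt means, here’s a small TypeScript sketch that splits a prompt into chunks at each standalone `BREAK`. It assumes the generator handles `BREAK` the way common Stable Diffusion front-ends do, by encoding each chunk separately; `splitOnBreak` is a hypothetical helper, not Perchance’s actual code.

```typescript
// Hypothetical helper (not Perchance code): splits a prompt into chunks at each
// standalone, uppercase BREAK. Assumes each chunk would then be encoded on its own,
// so words in one chunk have less influence on the other chunks.
function splitOnBreak(prompt: string): string[] {
  return prompt
    .split(/\bBREAK\b/) // whole word only, so "BREAKFAST" is left alone
    .map((chunk) => chunk.trim())
    .filter((chunk) => chunk.length > 0);
}

// One chunk: "blue" and "yellow" can bleed into every part of the prompt.
console.log(splitOnBreak("blue dress yellow hat"));
// Two chunks: "blue dress" and "yellow hat" are kept apart.
console.log(splitOnBreak("blue dress BREAK yellow hat"));
```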

1·1 day ago

I don’t know, seems to work okay for me. Could you give me a link to what you’re working on so I can see it not working?

Normally this is kinda redundant:

`quality = [qua = rarityTier]`. You could just do `qua = [rarityTier]` instead. Note that setting it to `rarityTier` will just reference that list object, not a selected item from it. If you wanted to select a random item and find the odds of that, you’d want to use `rarityTier.selectOne` instead.
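To show the difference between referencing a list and selecting an item from it, here’s a rough TypeScript analogue. It is not Perchance code: `WeightedList`, its `selectOne` and `oddsOf` methods, and the tier names and weights are all hypothetical stand-ins, assuming each item carries a relative weight.

```typescript
// Hypothetical TypeScript analogue of a weighted list; not Perchance's implementation.
interface WeightedItem {
  name: string;
  weight: number; // relative weight (assumed)
}

class WeightedList {
  readonly items: WeightedItem[];

  constructor(items: WeightedItem[]) {
    this.items = items;
  }

  // Sum of all weights, used to turn one item's weight into a probability.
  private totalWeight(): number {
    return this.items.reduce((sum, item) => sum + item.weight, 0);
  }

  // Picks one item at random, with probability proportional to its weight.
  // `rand` defaults to Math.random but can be swapped out for testing.
  selectOne(rand: () => number = Math.random): WeightedItem {
    let r = rand() * this.totalWeight();
    for (const item of this.items) {
      if (r < item.weight) return item;
      r -= item.weight;
    }
    // Guard against floating-point rounding leaving r just past the last weight.
    return this.items[this.items.length - 1];
  }

  // Probability that selectOne returns the given item.
  oddsOf(item: WeightedItem): number {
    return item.weight / this.totalWeight();
  }
}

const rarityTier = new WeightedList([
  { name: "common", weight: 70 },
  { name: "rare", weight: 25 },
  { name: "legendary", weight: 5 },
]);

// Like assigning `rarityTier` directly: this refers to the whole list, not one tier.
const listRef = rarityTier;

// Like `rarityTier.selectOne`: one tier, chosen at random by weight.
const picked = rarityTier.selectOne();
console.log(picked.name, rarityTier.oddsOf(picked));
```

Only `picked` is a single tier whose odds make sense to ask about; `listRef` is still the whole list.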

2·1 day ago

Perchance doesn’t really have documentation; I wouldn’t even call it that 😅 There’s no exhaustive documentation on pretty much anything to do with Perchance, at least none written by the dev. It’s all very temporary, WIP, partial and incomplete.

I’ve made my own documentation for it, to my own standards, but I haven’t covered things like AI generation. I would just send people to the page of whatever model/tech I’m using and let them do their own research there, instead of trying to cover everything myself in one document.

I think this is just how Stable Diffusion works. There’s always some “noise” to the system, even using the same seed.

As I said, bringing it up is totally fine. And they should amend it to be more accurate. And it looks like they have, from the comment they left here.

I didn’t say it’s “valid to put in as documentation.” I just said that I know what happened. It happened because a) the dev is not a documentation writer, b) they’re making this platform up as they go along (I’m sure they’d agree), and building it is their passion, not writing about it, and c) they probably wrote it in a hurry so they could move on to something that interested them more; it was good enough, so they called it a day. Oh, and it’s not really meant to be “documentation” of the AI generator; I don’t think that was necessarily the intent.

This isn’t a professional outfit, know what I mean? 😅 So basically… these things happen. 🤷 Also… yes, helping them pick up on these issues is good; they just need our help to do that.

Don’t read my response as “there’s nothing wrong with what you pointed out.” I was responding to the idea that it was written to be “false and misleading.” It wasn’t written to be false and misleading; it just turned out that way. 😅 A typo in a book isn’t put there maliciously; it just winds up there, and the editors and proofreaders it went through didn’t catch it. Nothing on Perchance has even been through editors and proofreaders, so you’re going to see mistakes like this that are not maliciously or purposefully false or misleading. They’re just simple mistakes.

On top of that, maybe that wasn’t what you intended, but the wording made it sound accusatory. So naturally, if that perceived accusation is not true, you’re going to see some defense against it. I think that’s all that’s going on here.

That’s what I was saying about “inaccurate.” That word doesn’t have any connotation of wrongdoing or malintent. “False” and “misleading” do, however. See what I mean? If not, that’s fine. I’m just explaining that what you thought I was saying about the problem being acceptable isn’t accurate either. 😜

1·2 days ago

Yeah. It’s just that the way you wrote your post made it sound like some heinous, egregious sin, as if the writer of that page were lying to us or something.

It’s not 100% accurate, but it’s an understandable simplification; in most cases the differences are incredibly minor. They could edit it to be more accurate, fair enough. But it’s not as evil or outrageous as it seemed from reading your post 😅

2·2 days ago

How would you reword it? “The exact same seed, prompt and negative prompt produce highly similar images.” Something like that?

2·3 days ago

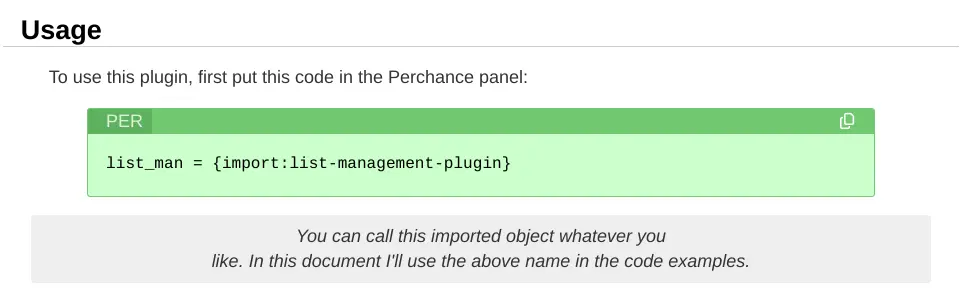

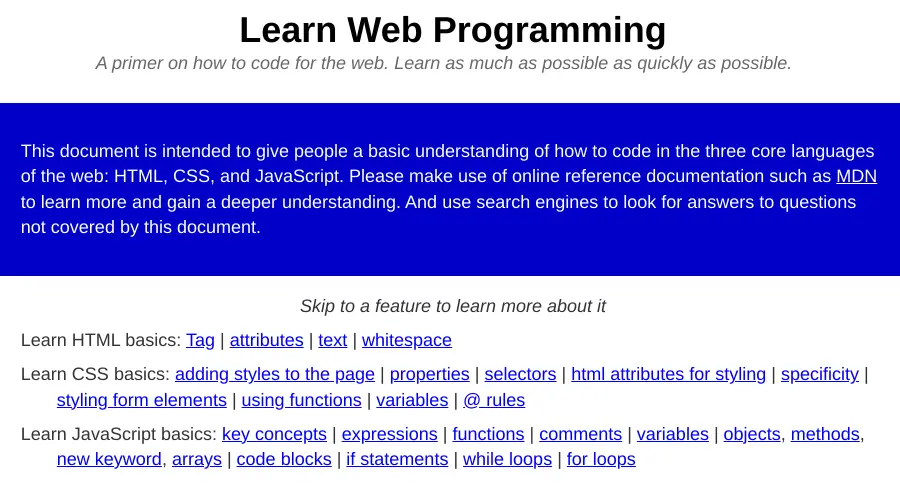

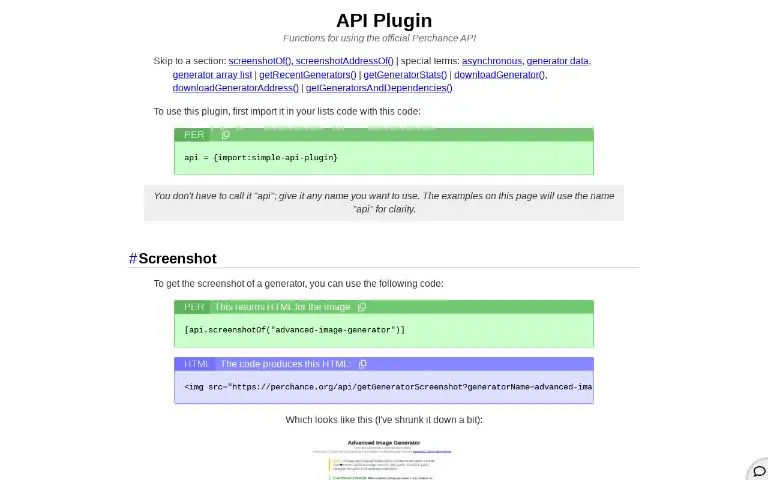

I made one not long ago actually… https://perchance.org/perchance-reference

3·4 days ago

You should use your browser’s dev tools to figure this out. In the Sources tab, turn on pausing on caught exceptions (in Chrome the checkbox is labelled “Pause on caught exceptions”; the exact label varies by browser) and see if you can get to the line matching the one printed in that error message. That’ll help you figure out which code is breaking.

Presumably it’s in the dynamic importer plugin, so you could look at that plugin’s code on the site, find that line, and work out what’s gone wrong from there.

2·4 days ago

I don’t know what “the AI button in the editor” means, so you might want to clarify by editing your post. Up to you.

1·4 days ago

Apparently mentioning a username in the post does not send them a notification. You have to do it in a comment, like this: @perchance@lemmy.world. (I don’t know why it’s like this, but that’s what I’ve been told.)

1·4 days ago

Just so you know… even if there is no button for this, you can simply go to perchance.org/resources. You could also bookmark it and move the bookmark into your browser’s bookmarks bar, so it’s always there as a button. Or just start typing the address; if it’s bookmarked or in your history, the browser will suggest it and let you select it.

Maybe they’ll add it back or maybe they won’t, but in the meantime you can still use it.

2·4 days ago

(For anyone else who sees this, this has been resolved through the Reddit post.)

3·6 days ago

The two sites have nothing to do with each other. This is a forum within the Lemmy platform, like a subreddit within the Reddit platform. Perchance is a whole separate site. You can sign up to Perchance using the same email and password, but they are separate accounts on separate sites.

2·6 days ago

I’ll try not to think about what text this thing is spitting out. Yikes!

Anyhow… I see no errors at all.

1·6 days ago

Cool cool, will do 👍

1·6 days ago

It is a bit “sharey” to be fair 😅 The tooltip helps in this case.

“These”? “Do”? Could you explain what you’re talking about more please?